Varnish Cache (commonly referred to as Varnish) is an open-source, fast reverse-proxy HTTP accelerator with a modern architecture and a flexible configuration language. A reverse proxy is software that you deploy in front of your web server (the origin server or backend), such as Nginx, to receive clients’ HTTP requests and forward them to the origin server for processing. It then delivers the origin server’s response back to the clients.

Varnish acts as a middleman between Nginx and clients, but with some performance benefits. Its main purpose is to make your applications load faster by working as a caching engine. It receives a request from a client and forwards it to the backend once, then caches the returned content (it stores files and fragments of files in memory). All future requests for identical content are then served from the cache.

This makes your web applications load faster and indirectly improves your web server’s overall performance because Varnish will serve content from memory instead of Nginx processing files from the storage disk.

Apart from caching, Varnish has several other use cases: it can work as an HTTP request router, a load balancer, a web application firewall, and more.

Varnish is configured using the highly extensible built-in Varnish Configuration Language (VCL), which enables you to write policies on how incoming requests should be handled. You can use it to build customized solutions, rules, and modules.

In this article, we will go through the steps to install the Nginx web server and Varnish Cache 6 on a fresh CentOS 8 or RHEL 8 server. RHEL 8 users should make sure their Red Hat subscription is enabled.

To set up a complete LEMP stack instead of installing the Nginx web server alone, check out the following guides.

Step 1: Install Nginx Web Server on CentOS/RHEL 8

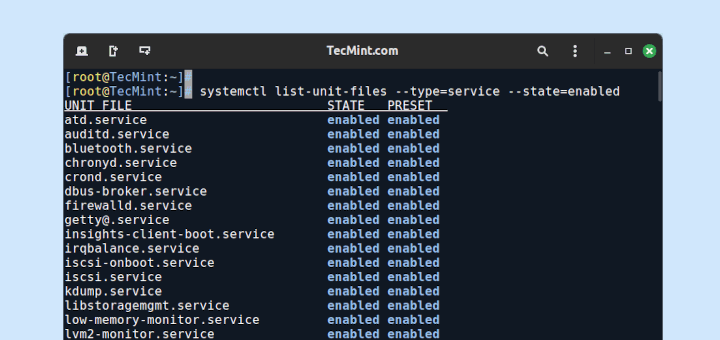

1. CentOS/RHEL 8 provides the Nginx web server in its default repositories, so we will install it from there using the following dnf commands.

# dnf update
# dnf install nginx

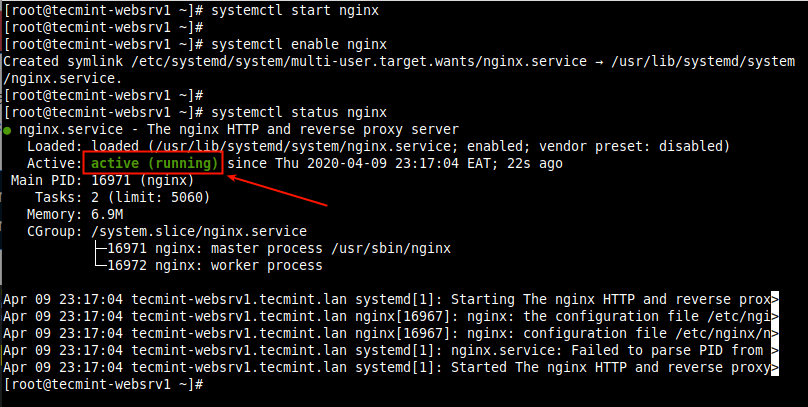

2. Once Nginx is installed, start the service, enable it to start at boot, and verify its status using the following systemctl commands.

# systemctl start nginx
# systemctl enable nginx
# systemctl status nginx

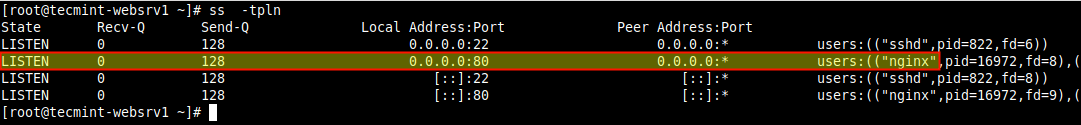

3. If you are curious, you can also check the TCP socket Nginx is listening on (port 80 by default) using the following ss command.

# ss -tpln

4. If a firewall is running on the system, make sure to update its rules to allow HTTP requests to the web server.

# firewall-cmd --zone=public --permanent --add-service=http
# firewall-cmd --reload

Step 2: Installing Varnish Cache 6 on CentOS/RHEL 8

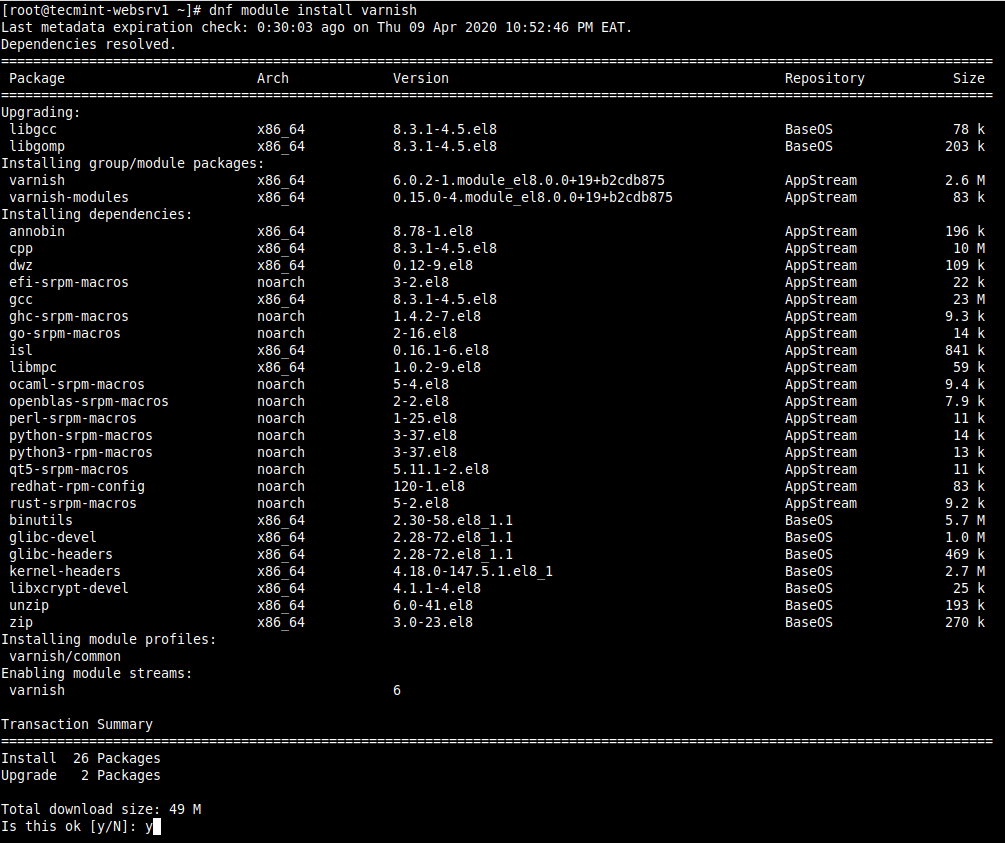

5. CentOS/RHEL 8 provides a Varnish Cache DNF module by default, which contains version 6.0 LTS (Long Term Support).

To install the module, run the following command.

# dnf module install varnish

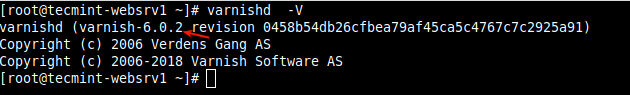

6. Once the module installation is complete, you can confirm the version of Varnish installed on your system.

# varnishd -V

7. After installing Varnish Cache, the main executable is available as /usr/sbin/varnishd and the Varnish configuration files are located in /etc/varnish/.

The file /etc/varnish/default.vcl is the main Varnish configuration file, written in VCL, and /etc/varnish/secret is the Varnish secret file.

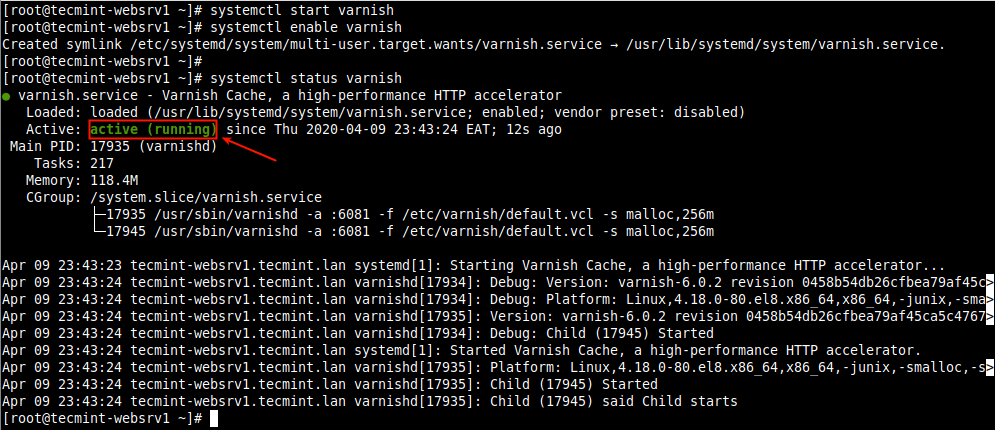

8. Next, start the Varnish service, enable it to auto-start during system boot and confirm that it is up and running.

# systemctl start varnish
# systemctl enable varnish
# systemctl status varnish

Step 3: Configuring Nginx to Work with Varnish Cache

9. In this section, we will show how to configure Varnish Cache to run in front of Nginx. By default, Nginx listens on port 80, and normally every server block (or virtual host) is configured to listen on this port.

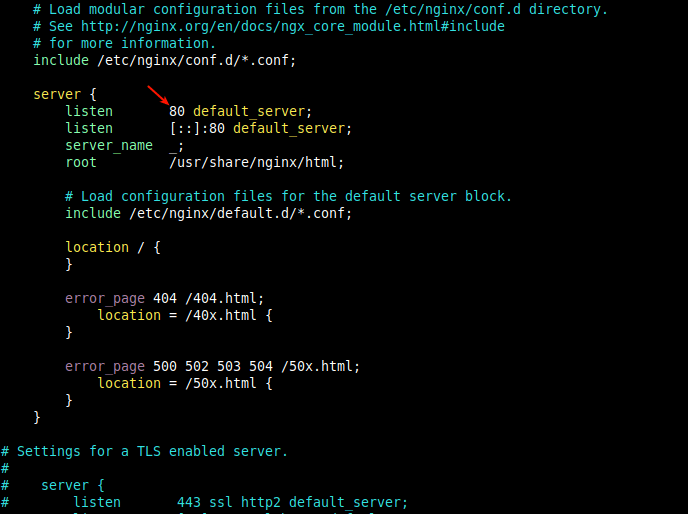

For example, take a look at the default nginx server block configured in the main configuration file (/etc/nginx/nginx.conf).

# vi /etc/nginx/nginx.conf

Look for the server block section as shown in the following screenshot.

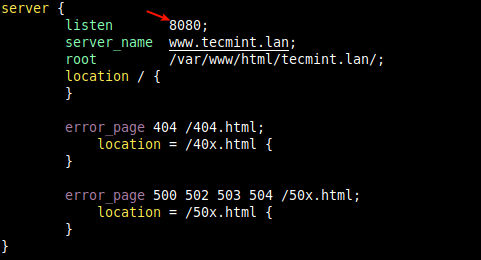

10. To run Varnish in front of Nginx, you should change the default Nginx port from 80 to 8080 (or any other port of your choice).

This should be done in all future server block configuration files (usually created under /etc/nginx/conf.d/) for sites or web applications that you want to serve via Varnish.

For example, the server block for our test site tecmint.lan is /etc/nginx/conf.d/tecmint.lan.conf and has the following configuration.

server {
    listen 8080;
    server_name www.tecmint.lan;
    root /var/www/html/tecmint.lan/;

    location / {
    }

    error_page 404 /404.html;
    location = /404.html {
    }

    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
    }
}

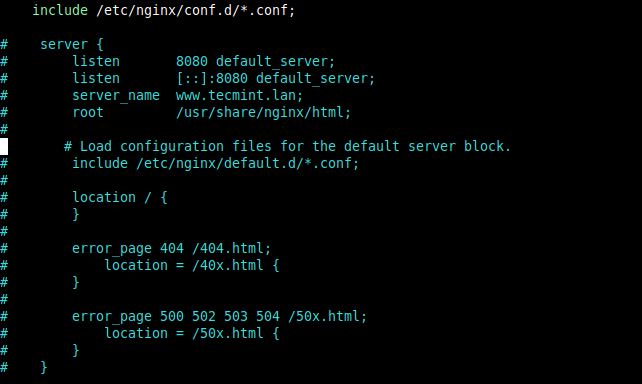

Important: Remember to disable the default server block by commenting out its configuration section in the /etc/nginx/nginx.conf file, as shown in the following screenshot. This allows you to run other websites/applications on your server. Otherwise, Nginx will always direct requests to the default server block.

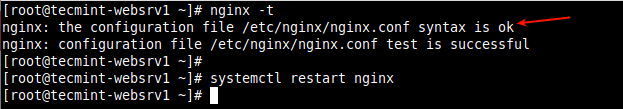

11. Once the configuration is complete, check the configuration files for errors and restart the Nginx service to apply the changes.

# nginx -t
# systemctl restart nginx
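Because every server block that should sit behind Varnish has to move off port 80, it can help to list the listen values that are actually active. The following is a minimal sketch, assuming GNU grep and configuration files ending in .conf; `nginx_listen_values` is a hypothetical helper name, not part of Nginx.

```shell
# Hypothetical helper: print each distinct value of the `listen` directive
# found in *.conf files under a directory (defaults to /etc/nginx).
# Commented-out lines (starting with #) are ignored.
nginx_listen_values() {
    grep -rhE --include='*.conf' '^[[:space:]]*listen[[:space:]]' "${1:-/etc/nginx}" |
        sed -E 's/^[[:space:]]*listen[[:space:]]+//; s/[[:space:]]*;.*$//' |
        sort -u
}

# Usage on the server:
#   nginx_listen_values
#   nginx_listen_values /etc/nginx/conf.d
```

If the output still contains 80, some server block has not been moved or commented out yet, and Varnish would later be unable to bind to that port.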

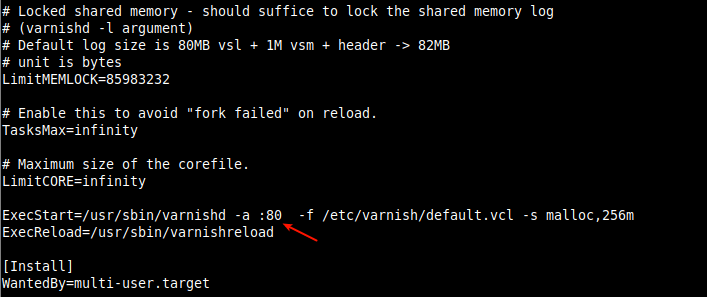

12. Next, to receive HTTP requests from clients, we need to configure Varnish to run on port 80. In earlier versions of Varnish Cache, this change was made in the Varnish environment file, which is now deprecated. In version 6.0 and above, we need to make the required change in the Varnish service file. Run the following command to open the appropriate service file for editing.

# systemctl edit --full varnish

Find the following line and change the value of the -a switch, which specifies the listen address and port. Set the port to 80 as shown in the following screenshot.

Note that if you do not specify an address, varnishd will listen on all available IPv4 and IPv6 interfaces active on the server.

ExecStart=/usr/sbin/varnishd -a :80 -f /etc/varnish/default.vcl -s malloc,256m

Save the changes in the file and exit.
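To double-check the edit without reopening the editor, the listen address can be read back from the unit file. This is only a sketch: it assumes the ExecStart directive sits on a single line with a space between -a and its value, as in the line above, and `varnish_listen_args` is a hypothetical helper name rather than a Varnish tool.

```shell
# Hypothetical helper: read unit-file text on stdin and print the argument
# that follows each -a switch on an ExecStart line.
varnish_listen_args() {
    awk '/^ExecStart=/ {
        for (i = 1; i < NF; i++)
            if ($i == "-a") print $(i + 1)
    }'
}

# Usage on the server (systemctl cat prints the unit file as systemd sees it):
#   systemctl cat varnish | varnish_listen_args
```

With the ExecStart line from this guide, the helper prints `:80`.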

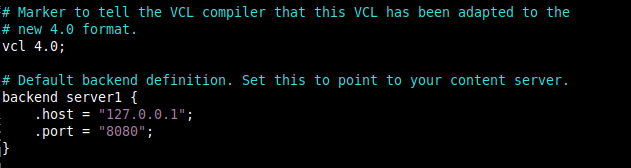

13. Next, you need to define the backend server that Varnish will fetch content from. This is done in the main Varnish configuration file.

# vi /etc/varnish/default.vcl

Look for the default backend configuration section and change the string “default” to server1 (or any name of your choice to represent your origin server). Then set the port to 8080 (or the Nginx listen port you defined in your server block).

backend server1 {
    .host = "127.0.0.1";
    .port = "8080";
}

For this guide, we are running Varnish and Nginx on the same server. If your Nginx web server is running on a different host, for example another server with the address 10.42.0.247, then set the .host parameter as shown.

backend server1 {
    .host = "10.42.0.247";
    .port = "8080";
}

Save the file and close it.
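Before restarting Varnish, it may be worth confirming that the backend address in the VCL file really points at Nginx. The sketch below assumes a single backend whose .host and .port values are double-quoted, each on its own line, as in the examples above; `vcl_backend_address` is a hypothetical helper name.

```shell
# Hypothetical helper: print "host:port" for the first backend defined in a
# VCL file (defaults to /etc/varnish/default.vcl). Exits non-zero if either
# value is missing.
vcl_backend_address() {
    awk -F'"' '
        /^[[:space:]]*\.host[[:space:]]*=/ && host == "" { host = $2 }
        /^[[:space:]]*\.port[[:space:]]*=/ && port == "" { port = $2 }
        END {
            if (host != "" && port != "") print host ":" port
            else exit 1
        }
    ' "${1:-/etc/varnish/default.vcl}"
}

# Usage on the server: request the page directly from the backend,
# bypassing Varnish.
#   curl -I "http://$(vcl_backend_address)/"
```

A response that carries Nginx's Server header suggests the backend definition is usable.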

14. Next, you need to reload the systemd manager configuration because of the recent changes in the Varnish service file, then restart the Varnish service to apply the changes as follows.

# systemctl daemon-reload
# systemctl restart varnish

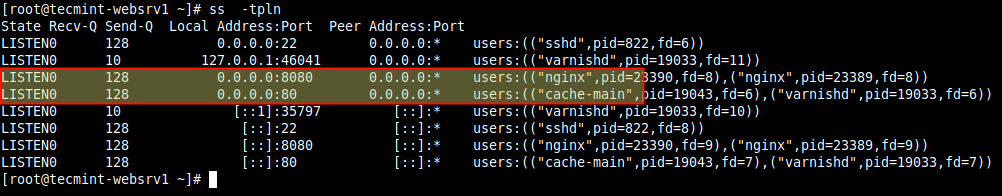

15. Now confirm that Nginx and Varnish are listening on the configured TCP sockets.

# ss -tpln
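The same check can be scripted instead of read by eye. The following sketch assumes the column layout that ss prints for TCP-only listings, where the fourth column is the local address and port; `port_is_listening` is a hypothetical helper name.

```shell
# Hypothetical helper: read `ss -tln` output on stdin and succeed if a
# listening socket is bound to the given port.
port_is_listening() {
    awk -v port="$1" '
        $1 == "LISTEN" {
            n = split($4, parts, ":")   # the port follows the last colon
            if (parts[n] == port) found = 1
        }
        END { exit(found ? 0 : 1) }
    '
}

# Usage on the server:
#   ss -tln | port_is_listening 80   && echo "port 80 is bound (Varnish expected)"
#   ss -tln | port_is_listening 8080 && echo "port 8080 is bound (Nginx expected)"
```

The helper only reports that a port is bound. To see which process owns it, keep the -p option and read the last column of the ss output.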

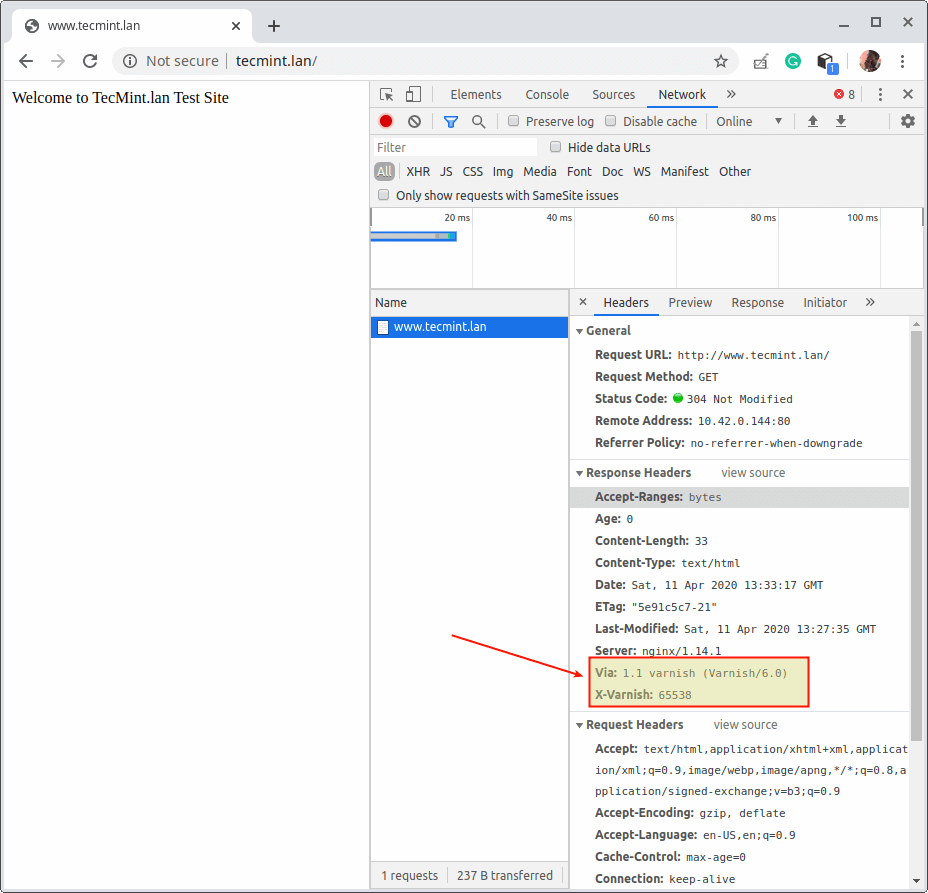

Step 4: Testing Nginx Varnish Cache Setup

16. Next, verify that the web pages are being served via Varnish Cache as follows. Open a web browser and navigate using the server IP or FQDN as shown in the following screenshot.

http://www.tecmint.lan OR http://10.42.0.144

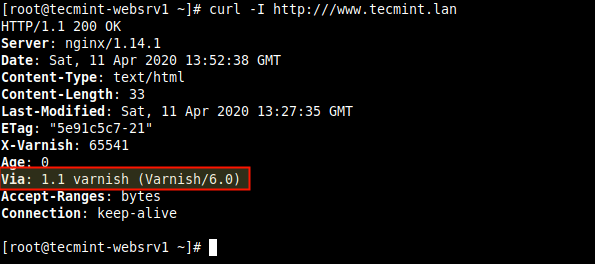

17. Alternatively, use the curl command as shown. Use your server’s IP address or website’s FQDN or use 127.0.0.1 or localhost if you are testing locally.

# curl -I http://www.tecmint.lan
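The response headers also hint at whether a page came from the cache. By default Varnish adds an X-Varnish header that holds one transaction ID when the object was fetched from the backend and two IDs when it was served from cache. The sketch below relies on that default behaviour, which custom VCL can change; `varnish_cache_status` is a hypothetical helper name.

```shell
# Hypothetical helper: read HTTP response headers on stdin and print
# "hit", "miss" or "not-varnish" based on the X-Varnish header.
varnish_cache_status() {
    tr -d '\r' | awk '
        tolower($1) == "x-varnish:" { seen = 1; ids = NF - 1 }
        END {
            if (!seen)        print "not-varnish"
            else if (ids > 1) print "hit"
            else              print "miss"
        }'
}

# Usage: run it twice; the second request is the one likely to be a hit.
#   curl -sI http://www.tecmint.lan | varnish_cache_status
#   curl -sI http://www.tecmint.lan | varnish_cache_status
```

Note that "miss" here also covers responses Varnish decided not to cache at all, so a page that never turns into a hit may simply be uncacheable.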

Useful Varnish Cache Administration Utilities

18. In this final section, we will briefly describe some of the useful utility programs that Varnish Cache ships with, that you can use to control varnishd, access in-memory logs and overall statistics and more.

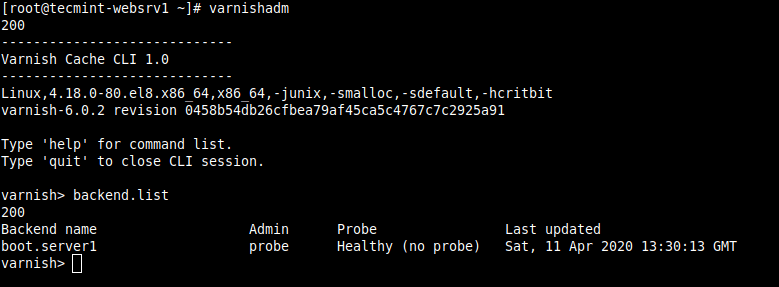

varnishadm

varnishadm is a utility to control a running Varnish instance. It establishes a CLI connection to varnishd. For example, you can use it to list configured backends as shown in the following screenshot (read man varnishadm for more information).

# varnishadm
varnish> backend.list

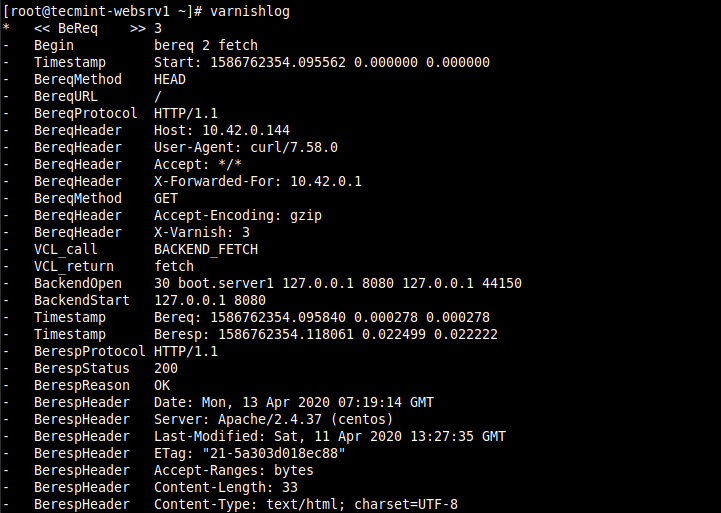

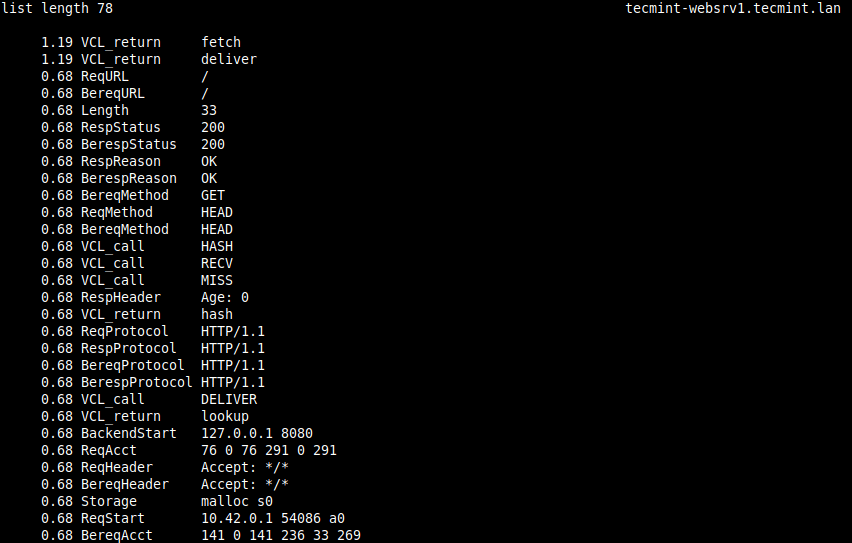

varnishlog

The varnishlog utility provides access to request-specific data. It offers information about specific clients and requests (read man varnishlog for more information).

# varnishlog

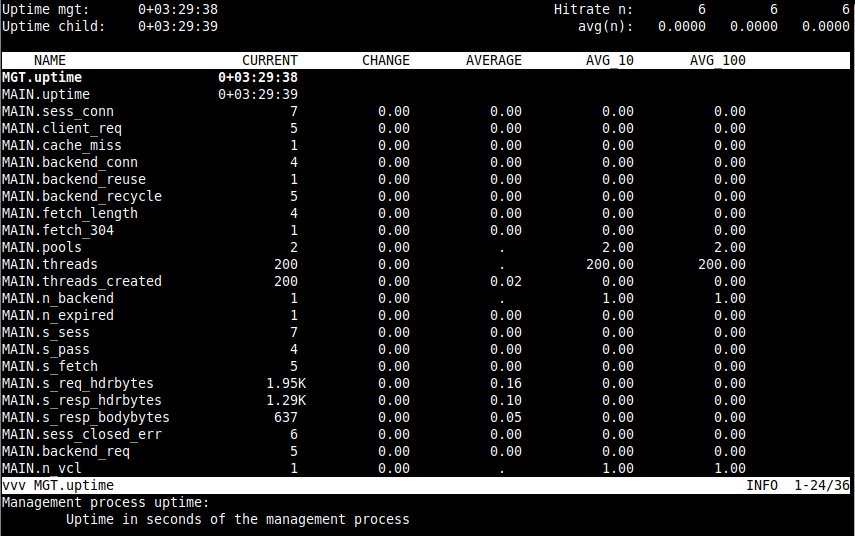

varnishstat

The varnishstat utility (short for Varnish statistics) gives you a glance at Varnish’s current performance by providing access to in-memory statistics such as cache hits and misses, information about the storage, threads created, and deleted objects (read man varnishstat for more information).

# varnishstat
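For a quick number rather than the interactive view, the counters can be printed once with the -1 option and reduced to a hit ratio. This is a rough sketch that only looks at the MAIN.cache_hit and MAIN.cache_miss counters; `cache_hit_ratio` is a hypothetical helper name.

```shell
# Hypothetical helper: read `varnishstat -1` output on stdin and print the
# share of cache lookups that were hits, as a percentage.
cache_hit_ratio() {
    awk '
        $1 == "MAIN.cache_hit"  { hit = $2 }
        $1 == "MAIN.cache_miss" { miss = $2 }
        END {
            total = hit + miss
            if (total == 0) { print "no cache lookups recorded yet"; exit }
            printf "%.1f%%\n", 100 * hit / total
        }'
}

# Usage on the server:
#   varnishstat -1 | cache_hit_ratio
```

The counters are cumulative since varnishd started, so the ratio reflects the whole uptime rather than recent traffic.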

varnishtop

The varnishtop utility reads the shared memory logs and presents a continuously updated list of the most commonly occurring log entries (read man varnishtop for more information).

# varnishtop

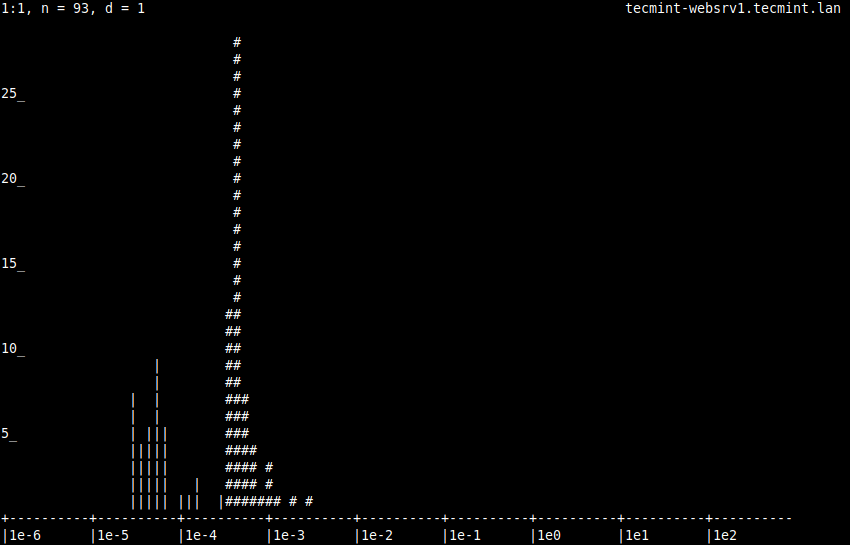

varnishhist

The varnishhist (Varnish history) utility parses the Varnish logs and outputs a continuously updated histogram showing the distribution of the last n requests by their processing time (read man varnishhist for more information).

# varnishhist

That’s all! In this guide, we have shown how to install Varnish Cache and run it in front of the Nginx HTTP server to accelerate web content delivery on CentOS/RHEL 8.

For more information, read the Varnish Cache documentation.

The main drawback of Varnish Cache is its lack of native support for HTTPS. To enable HTTPS on your web site/application, you need to configure an SSL/TLS termination proxy to work in conjunction with Varnish Cache to protect your site. In our next article, we will show how to enable HTTPS for Varnish Cache using Hitch on CentOS/RHEL 8.