SARG is an open source tool that analyses Squid log files and generates beautiful reports in HTML format with information about users, IP addresses, top accessed sites, total bandwidth usage, elapsed time, downloads, denied websites, and daily, weekly and monthly reports.

SARG is a very handy tool for seeing how much internet bandwidth each machine on the network uses and which websites the network's users are accessing.

In this article I will show you how to install and configure SARG (Squid Analysis Report Generator) on RHEL/CentOS/Fedora and Debian/Ubuntu/Linux Mint systems.

Installing Sarg – Squid Log Analyzer in Linux

I assume that you have already installed, configured and tested a Squid server as a transparent proxy, and a DNS server in caching mode for name resolution. If not, please install and configure them before moving on to the installation of Sarg.

Important: Please remember that without the Squid and DNS setup there is no point in installing sarg on the system, because it won't work at all. So please install them first before proceeding with the Sarg installation.

Follow these guides to install DNS and Squid in your Linux systems:

Install Cache-Only DNS Server

- Install Cache Only DNS Server in RHEL/CentOS 7

- Install Cache Only DNS Server in RHEL/CentOS 6

- Install Cache Only DNS Server in Ubuntu and Debian

Install Squid as Transparent Proxy

- Setting Up Squid Transparent Proxy in Ubuntu and Debian

- Install Squid Cache Server on RHEL and CentOS

Step 1: Installing Sarg from Source

The ‘sarg’ package is not included by default in RedHat-based distributions, so we need to compile and install it manually from the source tarball. For this, some additional prerequisite packages must be installed on the system before compiling it from source.

On RedHat/CentOS/Fedora

# yum install -y gcc gd gd-devel make perl-GD wget httpd

Once you've installed all the required packages, download the latest sarg source tarball, or use the following commands to download, compile and install it as shown below.

# wget http://liquidtelecom.dl.sourceforge.net/project/sarg/sarg/sarg-2.3.10/sarg-2.3.10.tar.gz
# tar -xvzf sarg-2.3.10.tar.gz
# cd sarg-2.3.10
# ./configure
# make
# make install

On Debian/Ubuntu/Linux Mint

On Debian-based distributions, the sarg package can easily be installed from the default repositories using the apt-get package manager.

$ sudo apt-get install sarg

Step 2: Configuring Sarg

Now it's time to edit some parameters in the SARG main configuration file. The file contains lots of options, but we will only edit the required parameters:

- Access logs path

- Output directory

- Date Format

- Overwrite report for the same date.

Open the sarg.conf file with your editor of choice and make the changes shown below.

# vi /usr/local/etc/sarg.conf [On RedHat based systems]

$ sudo nano /etc/sarg/sarg.conf [On Debian based systems]

Now uncomment the access_log line and set it to the path of your Squid access log file.

# sarg.conf
#
# TAG: access_log file
#     Where is the access.log file
#     sarg -l file
#
access_log /var/log/squid/access.log

Next, set the output directory where the generated Squid reports will be saved. Please note that on Debian-based distributions the Apache web root directory is ‘/var/www’, so be careful to use the correct web root path for your Linux distribution.

# TAG: output_dir
#     The reports will be saved in that directory
#     sarg -o dir
#
output_dir /var/www/html/squid-reports

Set the correct date format for reports. For example, ‘date_format e’ will display reports in ‘dd/mm/yy’ format.

# TAG: date_format
#     Date format in reports: e (European=dd/mm/yy), u (American=mm/dd/yy), w (Weekly=yy.ww)
#
date_format e

Next, uncomment overwrite_report and set it to ‘yes’.

# TAG: overwrite_report yes|no
#     yes - if report date already exist then will be overwritten.
#     no - if report date already exist then will be renamed to filename.n, filename.n+1
#
overwrite_report yes
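If you prefer to script these four changes instead of editing the file by hand, the following is a minimal sketch using GNU sed. The function name set_sarg_option is hypothetical and is not part of sarg. It assumes each option appears in sarg.conf either uncommented or commented out as `#option value`. It also assumes the value contains no `|` or `&` characters, because both are special in the sed replacement used here.

```shell
#!/bin/sh
# set_sarg_option FILE KEY VALUE (hypothetical helper, not part of sarg)
# Replaces any "KEY ..." or "#KEY ..." line in FILE with "KEY VALUE",
# or appends "KEY VALUE" if no such line exists. Needs GNU sed for -i.
set_sarg_option() {
    file=$1 key=$2 value=$3
    # Matches the option itself, optionally commented out, followed by
    # whitespace; description lines such as "# TAG: access_log" do not match.
    pattern="^#?[[:space:]]*${key}[[:space:]]"
    if grep -Eq "$pattern" "$file"; then
        # "|" is used as the sed delimiter so paths need no escaping.
        sed -i -E "s|${pattern}.*|${key} ${value}|" "$file"
    else
        printf '%s %s\n' "$key" "$value" >> "$file"
    fi
}

# Example (RedHat path from this article; use /etc/sarg/sarg.conf on Debian):
# set_sarg_option /usr/local/etc/sarg.conf access_log /var/log/squid/access.log
# set_sarg_option /usr/local/etc/sarg.conf output_dir /var/www/html/squid-reports
# set_sarg_option /usr/local/etc/sarg.conf date_format e
# set_sarg_option /usr/local/etc/sarg.conf overwrite_report yes
```

Back up sarg.conf before running it, because sed -i edits the file in place.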

That’s it! Save and close the file.

Step 3: Generating Sarg Report

Once you're done with the configuration, it's time to generate the Squid log report using the following command.

# sarg -x [On RedHat based systems]

$ sudo sarg -x [On Debian based systems]

Sample Output

[root@localhost squid]# sarg -x
SARG: Init
SARG: Loading configuration from /usr/local/etc/sarg.conf
SARG: Deleting temporary directory "/tmp/sarg"
SARG: Parameters:
SARG: Hostname or IP address (-a) =
SARG: Useragent log (-b) =
SARG: Exclude file (-c) =
SARG: Date from-until (-d) =
SARG: Email address to send reports (-e) =
SARG: Config file (-f) = /usr/local/etc/sarg.conf
SARG: Date format (-g) = USA (mm/dd/yyyy)
SARG: IP report (-i) = No
SARG: Keep temporary files (-k) = No
SARG: Input log (-l) = /var/log/squid/access.log
SARG: Resolve IP Address (-n) = No
SARG: Output dir (-o) = /var/www/html/squid-reports/
SARG: Use Ip Address instead of userid (-p) = No
SARG: Accessed site (-s) =
SARG: Time (-t) =
SARG: User (-u) =
SARG: Temporary dir (-w) = /tmp/sarg
SARG: Debug messages (-x) = Yes
SARG: Process messages (-z) = No
SARG: Previous reports to keep (--lastlog) = 0
SARG:
SARG: sarg version: 2.3.7 May-30-2013
SARG: Reading access log file: /var/log/squid/access.log
SARG: Records in file: 355859, reading: 100.00%
SARG: Records read: 355859, written: 355859, excluded: 0
SARG: Squid log format
SARG: Period: 2014 Jan 21
SARG: Sorting log /tmp/sarg/172_16_16_55.user_unsort
......

Note: The ‘sarg -x’ command reads the ‘sarg.conf’ configuration file, takes the Squid ‘access.log’ path from it and generates a report in HTML format. The -x option turns on the debug messages shown above.

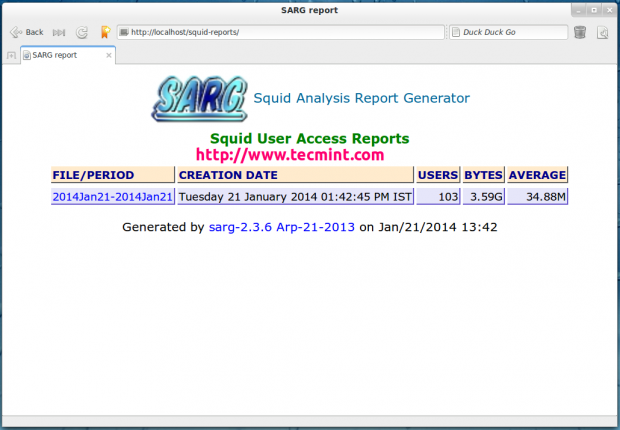

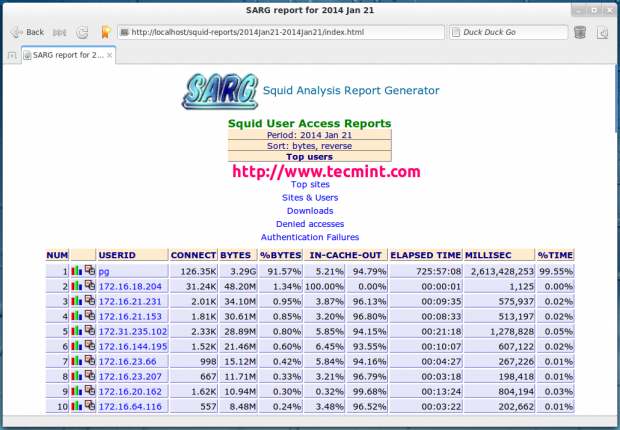
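Several readers in the comments below ended up with empty or missing reports because the access log was empty or at a different path. A small pre-flight check before calling sarg can catch that. This is a minimal sketch. The function name run_sarg_report and the SARG variable are hypothetical. It relies only on the -l (input log), -o (output dir) and -x (debug messages) options listed in the sample output above.

```shell
#!/bin/sh
# run_sarg_report LOG OUTDIR (hypothetical wrapper, not part of sarg)
# Refuses to run sarg when the Squid access log is missing or empty,
# then generates the report with an explicit input log and output dir.
SARG=${SARG:-sarg}   # override with e.g. SARG=/usr/local/bin/sarg

run_sarg_report() {
    log=$1 outdir=$2
    # -s is true only for a file that exists and is non-empty
    if [ ! -s "$log" ]; then
        echo "error: $log is missing or empty; check access_log in sarg.conf" >&2
        return 1
    fi
    mkdir -p "$outdir" || return 1
    "$SARG" -l "$log" -o "$outdir" -x
}

# Example:
# run_sarg_report /var/log/squid/access.log /var/www/html/squid-reports
```

Passing -l and -o explicitly makes it obvious which log and directory a given run used, regardless of what sarg.conf says.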

Step 4: Accessing Sarg Report

The generated reports are placed under ‘/var/www/html/squid-reports/’ or ‘/var/www/squid-reports/’ (depending on your output_dir setting), and can be accessed from a web browser using the address:

http://localhost/squid-reports OR http://ip-address/squid-reports
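Some readers in the comments saw a bare directory listing or "Not Found" instead of the report. In that case, first confirm that sarg actually wrote an index.html into output_dir (sarg prints "Making index.html" when it builds the index). This is a minimal sketch. The function name check_sarg_output is hypothetical. The printed URL assumes output_dir sits directly under the web root.

```shell
#!/bin/sh
# check_sarg_output OUTDIR (hypothetical helper)
# Succeeds if sarg has written its top-level index.html into OUTDIR,
# and prints the URL the reports should be reachable at on this host.
check_sarg_output() {
    outdir=${1%/}   # drop a trailing slash, if any
    if [ -f "$outdir/index.html" ]; then
        echo "OK: http://localhost/$(basename "$outdir")/"
    else
        echo "no index.html in $outdir; run sarg -x and check output_dir" >&2
        return 1
    fi
}

# Example:
# check_sarg_output /var/www/html/squid-reports
```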

Sarg Main Window

Specific Date

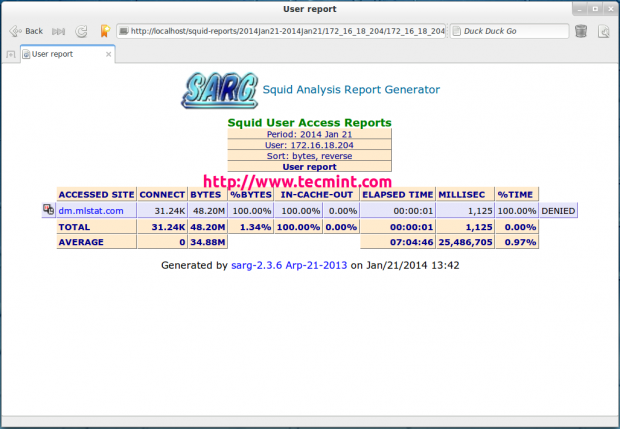

User Report

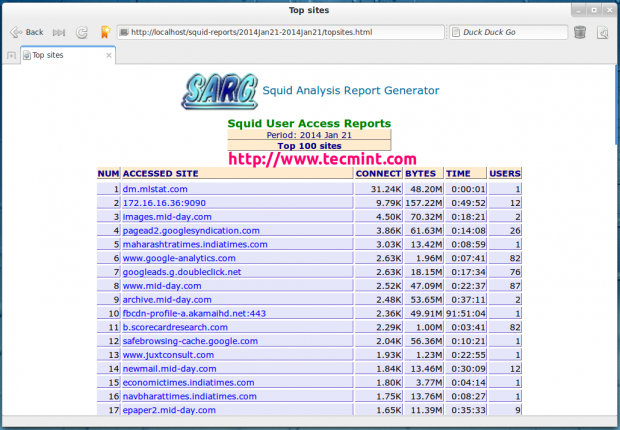

Top Accessed Sites

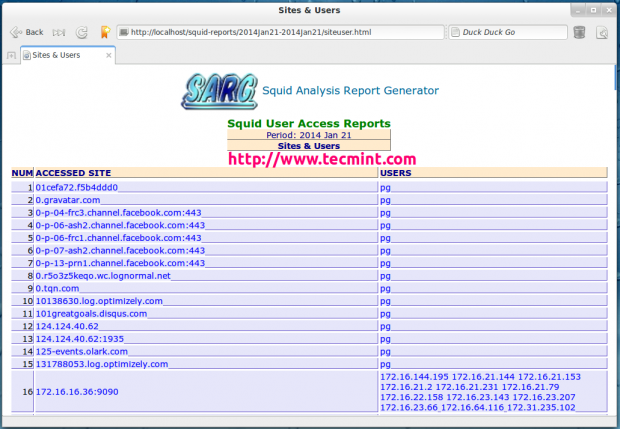

Top Sites and Users

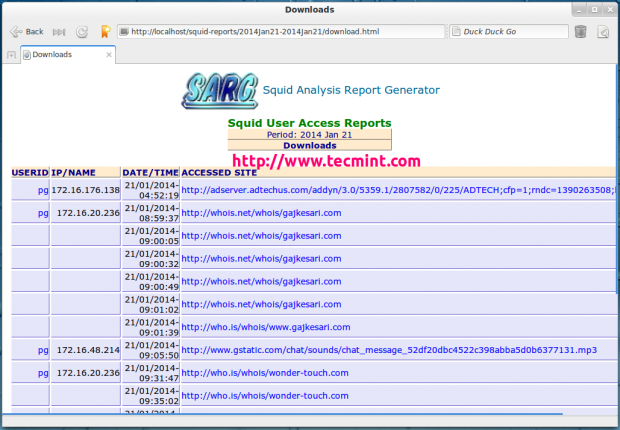

Top Downloads

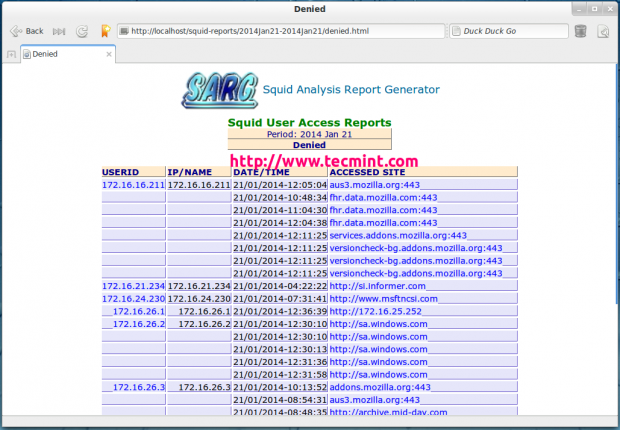

Denied Access

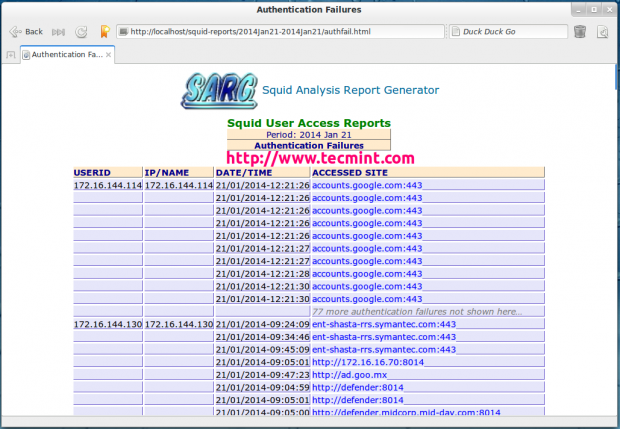

Authentication Failures

Step 5: Automatically Generating Sarg Reports

Sarg reports can be generated automatically at a given interval with cron. For example, to generate reports automatically on an hourly basis, you need to configure a cron job:

# crontab -e

Next, add the following line at the bottom of the file. Save and close it.

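0 * * * * /usr/local/bin/sarg -x

The above cron rule runs sarg at minute 0 of every hour, so a new SARG report is generated once an hour. (A rule beginning with * */1 would run it every minute instead.) On Debian-based systems, where sarg is installed from the package, the binary is usually /usr/bin/sarg rather than /usr/local/bin/sarg, so check with which sarg and adjust the path.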
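To add the entry without opening an editor, you can pipe the current crontab through a small filter. The filter also avoids adding a duplicate if the command is run twice. This is a minimal sketch. add_cron_line is a hypothetical helper name, and the sarg path in the example should match your system. It uses the standard behaviour of crontab -l (print the current user's crontab) and crontab - (install a crontab from standard input).

```shell
#!/bin/sh
# add_cron_line LINE (hypothetical filter)
# Copies a crontab from stdin to stdout and appends LINE only if an
# identical line is not already present.
add_cron_line() {
    line=$1
    found=0
    # "|| [ -n ... ]" keeps a final line that has no trailing newline
    while IFS= read -r existing || [ -n "$existing" ]; do
        [ "$existing" = "$line" ] && found=1
        printf '%s\n' "$existing"
    done
    [ "$found" -eq 1 ] || printf '%s\n' "$line"
}

# Example: install the hourly job (minute 0 of every hour) for the current user.
# (crontab -l 2>/dev/null) | add_cron_line '0 * * * * /usr/local/bin/sarg -x' | crontab -
```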


That's it with SARG! I will be coming up with a few more interesting articles on Linux. Until then, stay tuned to TecMint.com and don't forget to add your valuable comments.

Hi, thanks for the configuration. I installed it on Ubuntu 18.04, and everything is working fine with the proxy and SARG reporting.

But there is an issue with my SARG reports: the “download” report option is not displayed along with the other options (like Top sites, Sites & Users, and Denied accesses).

Please help me…

Hi Ravi Saive, excellent article.

Can you recommend some similar reporting systems compatible with squid and Debian 10?

Thanks in advance.

Alejandro

@Alejandro,

Give me some time, I will update this article to support the latest Debian releases…

@Ravi, thanks for answering me.

Can we set sarg to skip certain sites or keywords?

Hi,

After completing all the steps, when I try to run:

It shows “command not found”. Please help me out.

@Pravin,

Make sure you have installed Sarg as explained in this article…

After installing sarg, while trying to run it as sarg -x, I am getting an error:

Can you help me with this?

@Subhomay,

This is the most common problem when you are connecting to a Linux system remotely. Just disable the locale environment variable forwarding in your SSH configuration.

Find the following line and add a # sign at the beginning to comment it out. Restart the SSH server and try to run the sarg command again…

Hi Tuem, can you help me or show me your squid.conf, specifically how to set up Squid as a transparent proxy on CentOS 7/8 (I am using CentOS 8)? When I add the line http_port 3128 transparent or http_port 3128 intercept and then restart my Squid server, it reports an error on that line. Please help. Thanks in advance.

hi,

I would like to change the period, but I don't know where to change it.

Hello People

Please, I would like to set up this feature on my Suse server. Is this possible?

@Juan,

Yes, you can set up the Sarg Squid Analyzer on Suse Linux; just follow the instructions.

Hello Folks,

I'd like to know the procedure to set up this tool on Suse!!!

Thanks a lot for your help and support.

@Juan,

The same instructions also work on Suse Linux; give it a try and see.

Hi, why is my “denied accesses” report empty and missing when I access http://x.x.x.x/squid-reports?

I have configured sarg in Linux, but it is still not generating the report.

@Fentahun,

Could you tell me what error you are getting while generating reports?

Hi,

I've finished installing SARG, but it displays a directory listing instead of the default SARG index page, and this appears in the browser at localhost/squid-reports:

@Ram,

Have you generated the Squid report using the following command?

I got this error after configuring SARG.

Please help.

Hi, thank you very much, it is a really interesting and useful article. Is it possible to add a login page for accessing squid-reports? Or to change the URL to something else instead of 10.93.2.1/squid-reports, e.g. 10.93.2.1/reports-sarg/?

Thank You

@Thisara,

Yes you can password protect squid report page with Apache Password Protect Directory with .htaccess.
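Here is a rough sketch of that approach. Create a password file with Apache's htpasswd tool, then place an .htaccess file in the reports directory. The helper name write_sarg_htaccess, the user name admin and the password-file path are assumptions. Apache only honours these directives in .htaccess if the enclosing Directory block allows them with AllowOverride AuthConfig (or All).

```shell
#!/bin/sh
# write_sarg_htaccess REPORT_DIR PASSWD_FILE (hypothetical helper)
# Writes an .htaccess that asks for HTTP Basic authentication
# before Apache serves anything under REPORT_DIR.
write_sarg_htaccess() {
    dir=$1 passwd=$2
    # Unquoted EOF so that $passwd is expanded into the file
    cat > "$dir/.htaccess" <<EOF
AuthType Basic
AuthName "SARG reports"
AuthUserFile $passwd
Require valid-user
EOF
}

# Example (as root; htpasswd comes with apache2-utils on Debian, httpd-tools on RHEL):
# htpasswd -c /etc/httpd/.sarg-passwd admin
# write_sarg_htaccess /var/www/html/squid-reports /etc/httpd/.sarg-passwd
```

Keep the password file outside the web root so that it cannot be downloaded.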

Hi Ravi,

Thanks for your guide, but I have a problem with my Squid. I use Squid 3.5.20 on CentOS 7 as a transparent proxy, but my Squid can't show the domain name of the destination server like yours does. So my reports under Top Sites are all IP addresses like xxx.xxx.xxx.xxx:443 for HTTPS connections. How can I fix it?

Thanks a lot !

@Tuem,

I have not checked with CentOS 7, give me some time to check with CentOS 7.

@Ravi,

Thanks for your reply !

Could i take a look at your Squid.conf in this guide ?

I hope that I will find out something :)

Thanks again.

@Tuem,

Sorry, but I don't have it right now; we are no longer using Squid for this purpose.

Thanks a lot for your help :)

Hello Ravi,

I've already sent you a message on Facebook, but you haven't replied yet…

Is there a way to implement the SARG tool in my own website, or to edit the design?

Thanks in advance!

Regards

@SuperMario,

Yes, you can implement SARG, but you can't edit its design unless you have some coding skills.

Thanks for your fast answer!

Can you give me a guide on what I have to do? I haven't found anything helpful yet.

It’s not necessary to change the design, if i can implement it ;)

@SuperMario,

You want to implement SARG for an Apache website? But why? Why not go for GoAccess?

@Ravi Saive

There is the same problem. I’m not able to implement this tool on my website.

It generates its own page…

@SuperMario,

Do you have Squid running? If yes, just add the location of the Squid log to the Sarg configuration and restart Squid to see the reports.

I need the report for the admin of a company, that is my problem…

I'm making a website to embed such reports and present them simply…

Hi Ravi

Thanks for this great article.

I was wondering if SARG can be installed on a separate Linux box where I have management tools installed, because I have an old, already configured Squid server running and don't want to install anything on that box.

Thanks,

Basid.

@Basid,

No, that's not possible; sarg can only be installed on the same server where Squid is running. Alternatively, you can mount the remote logs directory on a different machine and install sarg there to analyze the logs.

Is there any open source tool for applying ACLs in Squid?

@Abhishek,

There isn't any such ACL tool, but you can use ACL rules in Squid as stated here: https://www.tecmint.com/secure-files-using-acls-in-linux/

How do I fix sarg so that it records users? I followed the steps, but it doesn't record users, only this computer. Please help me too. http://prntscr.com/d2exvn

Hi there, I wonder if sarg can be used in integration with MikroTik?

@Budi,

I don't think sarg will work with MikroTik. I have never tried it and really don't have any clue about it. Sorry.

Hello there. Thank you for the great tutorial. I am trying to install SARG on CentOS 7 and ran into the problem below when trying to run make install:

[root@localhost sarg-2.3.10]# make install

cd po ; make install

make[1]: Entering directory `/home/squid/sarg-2.3.10/po’

*** error: gettext infrastructure mismatch: using a Makefile.in.in from gettext version 0.18 but the autoconf macros are from gettext version 0.19

make[1]: *** [check-macro-version] Error 1

make[1]: Leaving directory `/home/squid/sarg-2.3.10/po’

make: *** [install-po] Error 2

I have downloaded and replaced the Makefile.in.in file in “/usr/share/gettext/po/” with a version 0.19 one, and also the Makefile.in.in in “/home/squid/sarg-2.3.10/po”, but I still get the same error.

Someone please help.

@Rangchisi,

Please visit the following official sarg support forum link. They've discussed the same sarg compile error there, and you might find a solution:

https://sourceforge.net/p/sarg/discussion/363374/thread/e4477b89/

@Rangchisi,


To fix this error at compile time:

Go to the SARG po folder:

[root@localhost sarg-2.3.10]# cd po

[root@localhost po]# pwd

/home/squid/sarg-2.3.10/po

Then edit :

[root@localhost po]# vim Makefile.in.in

Look for the line :

GETTEXT_MACRO_VERSION = 0.18

Change it to:

GETTEXT_MACRO_VERSION = 0.19

Return to the previous directory and start the compilation:

[root@localhost po]# cd ..

[root@localhost sarg-2.3.10]# ./configure

[root@localhost sarg-2.3.10]# make

[root@localhost sarg-2.3.10]# make install

The installation should now complete successfully.

Now edit your sarg.conf as you want…

OR

Update gettext from version 0.18 to 0.19.

Check your gettext version: # gettext --version
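The manual edit of po/Makefile.in.in described in this reply can also be done in one step with sed. This is a sketch that assumes GNU sed, and assumes the file contains the line exactly as `GETTEXT_MACRO_VERSION = 0.18`. The function name is hypothetical. You still need to re-run ./configure, make and make install afterwards.

```shell
#!/bin/sh
# bump_gettext_macro_version SRC_DIR (hypothetical helper)
# Changes GETTEXT_MACRO_VERSION from 0.18 to 0.19 in SRC_DIR/po/Makefile.in.in,
# matching the gettext 0.19 autoconf macros named in the error message.
bump_gettext_macro_version() {
    f="$1/po/Makefile.in.in"
    [ -f "$f" ] || { echo "not found: $f" >&2; return 1; }
    sed -i 's/^GETTEXT_MACRO_VERSION = 0\.18/GETTEXT_MACRO_VERSION = 0.19/' "$f"
    # Return success only if the new value is now present
    grep -q '^GETTEXT_MACRO_VERSION = 0\.19' "$f"
}

# Example:
# bump_gettext_macro_version ~/sarg-2.3.10 && cd ~/sarg-2.3.10 && ./configure && make && make install
```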

Hi Ravi,

I have just been asked to pull a Squid report for a date that has passed. My challenge at the moment is that I haven't been able to configure sarg to generate reports automatically, so I run the sarg -x command manually. The date in question is on a weekend, when I normally don't generate any reports because we don't usually have users in the office.

Will it be possible to generate such a report?

@JB,

If you still have the logs for the previous dates, you can run the sarg command manually to generate a report for that date. To be honest, I don't know exactly how to do it, but I am sure there is a way; just read the sarg man page and you will get some idea.
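As a starting point, sarg's -d option restricts a run to a date range. It is listed as "Date from-until (-d)" in the sample output in the article, and the script in a later comment passes it in dd/mm/yyyy-dd/mm/yyyy form. This is a minimal sketch. It assumes GNU date, and assumes the log sarg reads still contains the lines for that day. sarg_range is a hypothetical helper name. The rotated log file name depends on your logrotate setup.

```shell
#!/bin/sh
# sarg_range FROM [TO] (hypothetical helper)
# Converts ISO dates (YYYY-MM-DD) into the dd/mm/yyyy-dd/mm/yyyy form
# passed to sarg -d. TO defaults to FROM, i.e. a single day.
sarg_range() {
    from=$(date -d "$1" +%d/%m/%Y) || return 1
    to=$(date -d "${2:-$1}" +%d/%m/%Y) || return 1
    printf '%s-%s\n' "$from" "$to"
}

# Example: a report for one past day only.
# sarg -d "$(sarg_range 2016-03-19)" -x
# If that day has already been rotated out, point sarg at the older file with -l:
# sarg -l /var/log/squid/access.log.1 -d "$(sarg_range 2016-03-19)" -x
```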

Hi Ravi,

Once again thank you so much for getting me up and running with SARG.

I have been using it for a while now and we are accessing reports. Now a new challenge has come up: a few of our users have complained that they cannot access the internet outside of the office, i.e. on their Wi-Fi at home or on public Wi-Fi. Could this have something to do with the access list in Squid? What's the best way to set up the ACL so that users can access the internet without having to modify their internet settings all the time?

Regards

JB

@JB,

Thanks for appreciating our work and support. Yes, this may be due to a wrong ACL configured in the Squid access list, so you need to check your Squid ACL entries. I suggest you go through this article; it might help you: https://www.tecmint.com/configure-squid-server-in-linux/

Hi Ravi,

Thank you so much now my squid proxy is up and running and I can generate reports.

Just a quick question: in the user reports under accessed sites, it shows localhost 26143 and then says denied.

Do you know what that refers to?

@JB,

Nothing to worry about. It's just the total bandwidth of requests that came to localhost but were denied for security reasons…

To get sarg to compile without the error: “gettext version 0.18 but the autoconf macros are from gettext version 0.19” I had to edit the configure file, search for 0.19 and change that to 0.18. After that, running make install worked fine.

@Nick,

Thanks for the update about the gettext version problem. I am glad that you've found a way to fix it, and I hope it will help others too.

Hi,

I followed the steps given, but still can't generate reports using the browser.

Please assist

@Rabbit,

Are you seeing any error in the browser? Or could you check the logs for any errors?

Hi Ravi,

Thank you for your prompt response.

Yes, I'm seeing the ERR_CONNECTION_REFUSED error.

When I run sarg -x on the command line, this is the output I get:

SARG: sarg version: 2.3.6 Arp-21-2013

SARG: Loading User table: /etc/sarg/usertab

SARG: Reading access log file: /var/log/squid3/access.log

SARG: Records read: 1505, written: 1505, excluded: 0

SARG: Squid log format

SARG: Period: 14 Mar 2016

SARG: Sorting log /tmp/sarg/10_0_xx_xxx.user_unsort

SARG: Making file: /tmp/sarg/10_0_xx_xxx

SARG: Sorting file: /tmp/sarg/10_0_xx_xxx.utmp

SARG: Making report: 10.0.xx.xxx

SARG: Making index.html

SARG: Purging temporary file sarg-general

SARG: End

P.S I am a newbie in Linux

@JB,

That means something is being blocked by the firewall. A refused connection generally occurs when the desired port is not listening or is blocked on the server.

Hi Ravi,

Thank you again. After double-checking everything, I realized that I had made one error: I hadn't installed Apache. After running the apt-get install apache2 command and rebooting the Linux machine, I was able to access the web report.

Now one more challenge: how do I make sarg generate reports hourly? Does it have a specific time, or can I edit it myself?

Once again thanks a million for your help.

@JB,

To automate generating sarg reports on an hourly basis, add the following entry to the crontab file.

Hi Ravi,

I have pointed 5 users to the proxy. When I run sudo sarg -x I can see the logs, but when I open the sarg web page I don't see any of their history.

Is there anything I need to add to the sarg.conf file for it to work?

I added * */1 * * * /usr/local/bin/sarg -x to the crontab about 2 hours ago, but I still don't have any reports.

@JB,

Have you added the correct location of your Squid log file to the sarg.conf file? Could you share the filename of your Squid access log file?

Finally got today's report.

I have added this line to the crontab: * */1 * * * /usr/local/bin/sarg -x

and I will check after an hour to see if a new report is generated.

Will update you again after I have checked.

@JB,

I already mentioned in the article, and also replied in the comments, that you need to add that line to the crontab to generate hourly reports.

@JB,

I've updated the article with the most recent version of Sarg (i.e. 2.3.10) and also included articles on what needs to be set up before installing sarg so that reports are generated properly…

When I run sarg -z, this is the output I get:

sarg-2.3.6 Arp-21-2013

http://sarg.sourceforge.net

proxy-admin@ubuntu-Server:~$ sudo sarg -z

SARG: TAG: access_log /var/log/squid3/access.log

SARG: TAG: title “Rekord Squid User Access Reports”

SARG: TAG: font_face Tahoma,Verdana,Arial

SARG: TAG: header_color darkblue

SARG: TAG: header_bgcolor blanchedalmond

SARG: TAG: font_size 9px

SARG: TAG: background_color white

SARG: TAG: text_color #000000

SARG: TAG: text_bgcolor lavender

SARG: TAG: title_color color

SARG: TAG: temporary_dir /tmp

SARG: TAG: output_dir /var/www/html/squid-reports

SARG: TAG: output_dir /var/www/squid-report

SARG: TAG: resolve_ip no

SARG: TAG: user_ip no

SARG: TAG: topuser_sort_field BYTES reverse

SARG: TAG: user_sort_field BYTES reverse

SARG: TAG: exclude_users /etc/sarg/exclude_users

SARG: TAG: exclude_hosts /etc/sarg/exclude_hosts

SARG: TAG: date_format e

SARG: TAG: lastlog 0

SARG: TAG: remove_temp_files yes

SARG: TAG: index yes

SARG: TAG: index_tree file

SARG: TAG: overwrite_report yes

SARG: TAG: records_without_userid ip

SARG: TAG: use_comma yes

SARG: TAG: mail_utility mailx

SARG: TAG: topsites_num 200

SARG: TAG: topsites_sort_order CONNECT D

SARG: TAG: index_sort_order D

SARG: TAG: exclude_codes /etc/sarg/exclude_codes

SARG: TAG: max_elapsed 28800000

SARG: TAG: report_type topusers topsites sites_users users_sites date_time denied auth_failures site_user_time_date downloads

SARG: TAG: usertab /etc/sarg/usertab

SARG: TAG: long_url no

SARG: TAG: date_time_by bytes

SARG: TAG: charset Latin1

SARG: TAG: show_successful_message no

SARG: TAG: show_read_statistics no

SARG: TAG: topuser_fields NUM DATE_TIME USERID CONNECT BYTES %BYTES IN-CACHE-OUT USED_TIME MILISEC %TIME TOTAL AVERAGE

SARG: TAG: user_report_fields CONNECT BYTES %BYTES IN-CACHE-OUT USED_TIME MILISEC %TIME TOTAL AVERAGE

SARG: TAG: topuser_num 0

SARG: TAG: www_document_root /var/www/html

SARG: TAG: download_suffix “zip,arj,bzip,gz,ace,doc,iso,adt,bin,cab,com,dot,drv$,lha,lzh,mdb,mso,ppt,rtf,src,shs,sys,exe,dll,mp3,avi,mpg,mpeg”

SARG: (info) date=16/03/2016

SARG: (info) period=16 Mar 2016

SARG: (info) outdirname=/var/www/squid-report/16Mar2016-16Mar2016

SARG: (info) Dansguardian report not produced because no dansguardian configuration file was provided

SARG: (info) No redirector logs provided to produce that kind of report

SARG: (info) No downloaded files to report

SARG: (info) Authentication failures report not produced because it is empty

SARG: (info) Redirector report not generated because it is empty

proxy-admin@ubuntu-Server:~$

@JB,

Did you read the last 5 lines? They clearly say that the reports are not being generated because the logs are empty. Please check your Squid log file size, verify the content of the file using the cat command, and also make sure to check your DansGuardian configuration.

Hi Ravi,

Me again. I have tried the last steps to generate sarg reports but still can't. The only report I see was generated yesterday afternoon, and since then I can't generate any report.

Please assist don’t know what to do next :(

@JB,

Did your cron job run successfully? Please check the /var/log/cron logs for any errors, or try running that command manually and see whether any reports are generated.

The Squid access log in my sarg.conf is: access_log /var/log/squid3/access.log

@JB,

Thanks for sharing the information. Could you also give me access to your server so that I can resolve the issue?

“I’ve updated the article with the most recent version of Sarg (i.e. 2.3.10) and also included articles that needed to be setup before installing sarg to generate reports properly…”

May I please get access or a link to the updated article and sarg version?

Regards

@JB,

I updated this article to the most recent version of sarg; just go through the article and you will see the difference.

Hi,

Thank you for such a nice article. For the last few days I have had a problem: the SARG folder is created in /tmp by the script in the cron job, but index.html is not updated and the SARG folder is not deleted from /tmp automatically. It was working fine before. What I figured out is that the SARG tool is unable to read a large access.log file. When I run the script in debug mode, it shows the error “SARG: line too long in /var/logs/access.log”, and when there are fewer entries in access.log, the SARG tool creates reports successfully.

Any idea how to enable SARG to read large access.log file ?

Regards,

Sarfraz

Hi Ravi,

Great tutorial, it helped me get sarg working :)

Been trying for days to auto-generate the report which doesn’t work for me.

I'm running sarg -x manually, but of course that way I won't get a constant supply of daily reports at the same time.

I have also tried setting up weekly and monthly reports but can't get this to work.

Can you advise how I can generate these (daily,weekly & monthly) without overriding existing data?

I've been using this script, but I'm happy to start from scratch as it doesn't generate anything.

[code]
#!/bin/sh
# Temp file that captures sarg's output and errors
# ($$ is the script's PID; $RANDOM is not available in plain /bin/sh)
TMPFILE=/tmp/sarg-reports.$$
ERRORS="${TMPFILE}.errors"

TODAY=$(date --date "today" +%d/%m/%Y)
YESTERDAY=$(date --date "1 day ago" +%d/%m/%Y)
WEEKAGO=$(date --date "1 week ago" +%d/%m/%Y)
# Note: MONTHAGO is already a from-until range, and +31 assumes a 31-day month
MONTHAGO=$(date --date "1 month ago" +01/%m/%Y)-$(date --date "1 month ago" +31/%m/%Y)

# This is for the sarg daily report
#/usr/local/bin/sarg -f /usr/local/etc/sarg.conf -d $YESTERDAY >$ERRORS 2>&1
/usr/local/bin/sarg -f /usr/local/etc/sarg.conf -d "${YESTERDAY}-${TODAY}" > "${ERRORS}" 2>&1

# This is for the sarg weekly report
#/usr/local/bin/sarg -f /usr/local/etc/sarg.conf -d $WEEKAGO >$ERRORS 2>&1
#/usr/local/bin/sarg -f /usr/local/etc/sarg.conf -d "${WEEKAGO}-${TODAY}" > "${ERRORS}" 2>&1

# This is for the sarg monthly report
#/usr/local/bin/sarg -f /usr/local/etc/sarg.conf -x -z -d "$MONTHAGO" >$ERRORS 2>&1
#/usr/local/bin/sarg -f /usr/local/etc/sarg.conf -x -z -d "${MONTHAGO}-${TODAY}" > "${ERRORS}" 2>&1
[/code]

Thx

After editing the sarg.conf file as you mentioned, it gives an error after ‘sarg -x’:

SARG: Cannot set the locale LC_ALL to the environment variable.

Hi, I found the solution. I forgot that I was connecting remotely, and different locales on the SSH server and client were causing the problem. So I edited /etc/ssh/ssh_config on my local machine and commented out the SendEnv LANG LC_* line. Now it works. I hope this will help someone.
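The same change can be made with a single sed command. This is a sketch that assumes GNU sed, and comment_sendenv_locale is a hypothetical helper name. Run it on the client machine you SSH from, since SendEnv is a client-side ssh_config option. Keep the backup it writes, and open a new SSH session afterwards.

```shell
#!/bin/sh
# comment_sendenv_locale FILE (hypothetical helper)
# Prefixes uncommented "SendEnv LANG LC_*" lines in an ssh_config file
# with "#", keeping a copy of the original as FILE.bak.
# Already commented lines are left alone, so running it twice is harmless.
comment_sendenv_locale() {
    sed -i.bak 's/^\([[:space:]]*SendEnv LANG LC_\*\)/#\1/' "$1"
}

# Example (needs root):
# comment_sendenv_locale /etc/ssh/ssh_config
```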

@Thusitha,

Thanks for the tip; I hope it will be helpful to others…

I direct all internet traffic through Squid3 proxy and run sarg reports everyday. Sarg reports is always showing 20% less usage than my ISP which is Telstra Bigpond. What are the additional MBytes that are unaccounted for?

Thanks Ravi. Great post. But I am a bit confused about authentication for the HTML page. Can you help me add a user with a password for the sarg output report page?

@Nirghum,

You mean password protect html page with user credentials?

How do I show user names in SARG reports? IP addresses are already showing.

please help

Thanks

Deepak

Thank you very much, it helped me a lot.

Starting httpd: (98)Address already in use: make_sock: could not bind to address

I'm getting the above error while starting the httpd service, because Squid is also using port 80.

@Ali,

You can't use the same port for two different services. Please change the port of one of them, or use only one.

@Ravi, I am waiting for your response. Is there any progress on your side?

@Tewelde,

Please give me a day or 2, will update you by Monday….

Thank you @Ravi for your great effort. I will wait for you.

Finally solved from this site

http://www.linuxquestions.org/questions/linux-networking-3/sarg-not-working-on-browser-even-httpd-service-is-working-4175426851/

@Tewelde,

That's good to hear. Can you tell us what the problem was and how you solved it, so that it will help other users?

I only corrected the full path in the document root. Now when I try to access it by IP address it works, but when I browse to IP address/sarg it doesn't work:

DocumentRoot "/var/www/sarg"

Options FollowSymLinks

AllowOverride All

AuthType Basic

AuthName "Please enter Valid password to access sarg"

AuthUserFile /etc/apache2/passwd

Require valid-user

Options Indexes FollowSymLinks MultiViews

AllowOverride All

Order allow,deny

allow from all

Order allow,deny

allow from all

ScriptAlias /cgi-bin/ /usr/lib/cgi-bin/

AllowOverride all

Options +ExecCGI -MultiViews +SymLinksIfOwnerMatch

Order allow,deny

Allow from all

It works fine for me now, but the only problem is that it doesn't generate reports automatically.

@Tewelde,

Have you added the correct path to your Squid access log file in the sarg.conf file? Are the logs being generated properly? Does the path exist? Please verify all of these…

I have installed the sarg Squid analyzer on Debian following these instructions, but I can't view it in the browser; it gives me an error:

Not Found

The requested URL /squid-reports was not found on this server.

Apache/2.2.22 (Debian) Server at 10.*.*.* Port 80

My Apache error log is http://paste.ubuntu.com/10385223/ and my sarg.conf is http://paste.ubuntu.com/10385749/

Please help me, I really need it.

@Tewelde,

It's due to a wrong Apache document directory configuration in the sarg.conf file. It should be ‘/var/www’ for Debian-based distros, not /var/www/html. Correct the path in the sarg.conf file and try again, and let me know if there are any errors.

Dear Ravi,

I have already tried, but nothing changed; the error is still the same:

Not Found

The requested URL /sarg was not found on this server.

Apache/2.2.22 (Debian) Server at 10.*.*.* Port 80

I have tried to correct the document directory in Apache, but it is still not working: http://paste.ubuntu.com/10402389/

and my sarg.conf configuration looks like this: http://paste.ubuntu.com/10402413/

@tewelde,

Can you please tell us on which Debian version you’re trying? I will try myself let’s see how things works..

I am using:

#lsb_release -a

No LSB modules are available.

Distributor ID: Debian

Description: Debian GNU/Linux 7.1 (wheezy)

Release: 7.1

Codename: wheezy

@tewelde,

Thanks for the information, I will test it myself and will update you..give me a day or 2..

Sorry, but do I need to enable the Apache service on the Squid server in order to view the report? I have configured everything accordingly, but I cannot view the report. Please advise.

@LeeYY,

Yes, you must have working Apache service to view reports..

Hi,

Tested OK. Thank you very much!

Hi Ravi,

I have added “* * 1 * * /usr/local/bin/sarg -x” in crontab -e, but the report is not generated automatically. What could be the reason?

Hello Ravi,

I installed SARG and everything works fine. I get a report each hour and can access the reports through the web page. However, I always have only the (Top sites and Sites & Users and Downloads), even though my sarg.conf has this line:

report_type topusers topsites sites_users users_sites date_time denied auth_failures site_user_time_date downloads

I tried to access a denied website and even tried to access my website with wrong credentials.

Any ideas? Thanks in advance.

Hi, I configured sarg following your configuration. I am using Ubuntu 14.04 and running the Zentyal proxy with squid3. When I run the sarg -x command I get the errors below:

SARG: Init

SARG: Loading configuration from /etc/sarg/sarg.conf

SARG: Unknown option resolve_ip

SARG: Loading exclude host file from: /etc/sarg/exclude_hosts

SARG: Loading exclude file from: /etc/sarg/exclude_users

SARG: Parameters:

SARG: Hostname or IP address (-a) =

SARG: Useragent log (-b) =

SARG: Exclude file (-c) = /etc/sarg/exclude_hosts

SARG: Date from-until (-d) =

SARG: Email address to send reports (-e) =

SARG: Config file (-f) = /etc/sarg/sarg.conf

SARG: Date format (-g) = Europe (dd/mm/yyyy)

SARG: IP report (-i) = No

SARG: Keep temporary files (-k) = No

SARG: Input log (-l) = /var/log/squid3/access.log

SARG: Resolve IP Address (-n) = No

SARG: Output dir (-o) = /var/lib/sarg/

SARG: Use Ip Address instead of userid (-p) = No

SARG: Accessed site (-s) =

SARG: Time (-t) =

SARG: User (-u) =

SARG: Temporary dir (-w) = /tmp/sarg

SARG: Debug messages (-x) = Yes

SARG: Process messages (-z) = No

SARG: Previous reports to keep (--lastlog) = 0

SARG:

SARG: sarg version: 2.3.6 Arp-21-2013

SARG: Loading User table: /etc/sarg/usertab

SARG: Reading access log file: /var/log/squid3/access.log

SARG: Records read: 4699, written: 4699, excluded: 0

SARG: Squid log format

SARG: Period: 28 Nov 2014

SARG: Sorting log /tmp/sarg/10_100_100_117.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_117

SARG: Sorting log /tmp/sarg/10_100_100_69.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_69

SARG: Sorting log /tmp/sarg/10_100_100_57.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_57

SARG: Sorting log /tmp/sarg/10_100_100_51.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_51

SARG: Sorting log /tmp/sarg/10_100_100_94.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_94

SARG: Sorting log /tmp/sarg/10_100_100_100.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_100

SARG: Sorting log /tmp/sarg/10_100_100_76.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_76

SARG: Sorting log /tmp/sarg/10_100_100_120.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_120

SARG: Sorting log /tmp/sarg/10_100_100_78.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_78

SARG: Sorting log /tmp/sarg/10_100_100_53.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_53

SARG: Sorting log /tmp/sarg/10_100_100_75.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_75

SARG: Sorting log /tmp/sarg/10_100_100_83.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_83

SARG: Sorting log /tmp/sarg/10_100_100_80.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_80

SARG: Sorting log /tmp/sarg/10_100_100_65.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_65

SARG: Sorting log /tmp/sarg/10_100_100_61.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_61

SARG: Sorting log /tmp/sarg/10_100_100_85.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_85

SARG: Sorting log /tmp/sarg/10_100_100_84.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_84

SARG: Sorting log /tmp/sarg/10_100_100_121.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_121

SARG: Sorting log /tmp/sarg/10_100_100_74.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_74

SARG: Sorting log /tmp/sarg/10_100_100_50.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_50

SARG: Sorting log /tmp/sarg/10_100_100_91.user_unsort

SARG: Making file: /tmp/sarg/10_100_100_91

SARG: Sorting file: /tmp/sarg/10_100_100_117.utmp

SARG: Making report: 10.100.100.117

SARG: Sorting file: /tmp/sarg/10_100_100_69.utmp

SARG: Making report: 10.100.100.69

SARG: Sorting file: /tmp/sarg/10_100_100_57.utmp

SARG: Making report: 10.100.100.57

SARG: Sorting file: /tmp/sarg/10_100_100_51.utmp

SARG: Making report: 10.100.100.51

SARG: Sorting file: /tmp/sarg/10_100_100_94.utmp

SARG: Making report: 10.100.100.94

SARG: Sorting file: /tmp/sarg/10_100_100_100.utmp

SARG: Making report: 10.100.100.100

SARG: Sorting file: /tmp/sarg/10_100_100_76.utmp

SARG: Making report: 10.100.100.76

SARG: Sorting file: /tmp/sarg/10_100_100_120.utmp

SARG: Making report: 10.100.100.120

SARG: Sorting file: /tmp/sarg/10_100_100_78.utmp

SARG: Making report: 10.100.100.78

SARG: Sorting file: /tmp/sarg/10_100_100_53.utmp

SARG: Making report: 10.100.100.53

SARG: Sorting file: /tmp/sarg/10_100_100_75.utmp

SARG: Making report: 10.100.100.75

SARG: Sorting file: /tmp/sarg/10_100_100_83.utmp

SARG: Making report: 10.100.100.83

SARG: Sorting file: /tmp/sarg/10_100_100_80.utmp

SARG: Making report: 10.100.100.80

SARG: Sorting file: /tmp/sarg/10_100_100_65.utmp

SARG: Making report: 10.100.100.65

SARG: Sorting file: /tmp/sarg/10_100_100_61.utmp

SARG: Making report: 10.100.100.61

SARG: Sorting file: /tmp/sarg/10_100_100_85.utmp

SARG: Making report: 10.100.100.85

SARG: Sorting file: /tmp/sarg/10_100_100_84.utmp

SARG: Making report: 10.100.100.84

SARG: Sorting file: /tmp/sarg/10_100_100_121.utmp

SARG: Making report: 10.100.100.121

SARG: Sorting file: /tmp/sarg/10_100_100_74.utmp

SARG: Making report: 10.100.100.74

SARG: Sorting file: /tmp/sarg/10_100_100_50.utmp

SARG: Making report: 10.100.100.50

SARG: Sorting file: /tmp/sarg/10_100_100_91.utmp

SARG: Making report: 10.100.100.91

SARG: Making index.html

SARG: The directory “/var/lib/sarg/sarg-general” looks like a report directory but doesn’t contain a sarg-date file. You should delete it

SARG: The directory “/var/lib/sarg/sarg-date” looks like a report directory but doesn’t contain a sarg-date file. You should delete it

SARG: The directory “/var/lib/sarg/sarg-sites” looks like a report directory but doesn’t contain a sarg-date file. You should delete it

SARG: The directory “/var/lib/sarg/sarg-users” looks like a report directory but doesn’t contain a sarg-date file. You should delete it

SARG: Purging temporary file sarg-general

SARG: End

No idea, man. Sorry.

Hi Ravi,

I have a question about setting up SARG to read the squid log file. In my case SARG and squid are on separate servers. How do I set up SARG to access the remote squid access log?

Thank you in advance.
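One common approach is to copy the log from the proxy to the SARG server on a schedule, and then point SARG at the copy with -l (the "Input log (-l)" option listed in the debug output on this page). The sketch below makes several assumptions. The host name squid.example.lan and the local path /var/tmp/squid-access.log are hypothetical, and it assumes rsync over SSH already works non-interactively for root (for example with key-based login).

```
# Hypothetical crontab on the SARG server
# 23:50 pull the current access.log from the proxy over SSH
50 23 * * * rsync -az root@squid.example.lan:/var/log/squid/access.log /var/tmp/squid-access.log
# 23:55 build the report from the local copy
55 23 * * * /usr/local/bin/sarg -l /var/tmp/squid-access.log
```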

[root@slave ~]# sarg

bash: sarg: command not found

What should I do now?

I followed your instructions exactly, and everything installed successfully.

Please reply.

Sorry, I got it.

I did not get your point, please give me the solution.

Hello,

Can you tell me in which programming language sarg is written?

Thanks

Hi Ravi,

I have CentOS 6.4 on x86_64 and got the following error while running sarg 2.3.1. Please help with this.

[root@20proxy@pu bin]# sarg -x -z

SARG: Init

SARG: Loading configuration from /etc/sarg/sarg.conf

SARG: TAG: access_log /var/log/squid/access.log

SARG: TAG: output_dir /var/www/html/squid-reports

SARG: TAG: resolve_ip yes

SARG: TAG: date_format e

SARG: TAG: overwrite_report yes

SARG: TAG: mail_utility mail

SARG: TAG: show_successful_message no

SARG: TAG: external_css_file /var/www/sarg/sarg.css

SARG: Parameters:

SARG: Hostname or IP address (-a) =

SARG: Useragent log (-b) =

SARG: Exclude file (-c) =

SARG: Date from-until (-d) =

SARG: Email address to send reports (-e) =

SARG: Config file (-f) = /etc/sarg/sarg.conf

SARG: Date format (-g) = Europe (dd/mm/yyyy)

SARG: IP report (-i) = No

SARG: Input log (-l) = /var/log/squid/access.log

SARG: Resolve IP Address (-n) = Yes

SARG: Output dir (-o) = /var/www/html/squid-reports/

SARG: Use Ip Address instead of userid (-p) = No

SARG: Accessed site (-s) =

SARG: Time (-t) =

SARG: User (-u) =

SARG: Temporary dir (-w) = /tmp/sarg

SARG: Debug messages (-x) = Yes

SARG: Process messages (-z) = Yes

SARG:

SARG: sarg version: 2.3.1 Sep-18-2010

SARG: Reading access log file: /var/log/squid/access.log

SARG: getword loop detected after 255 bytes.41%

SARG: Line="29/Sep/2014:02:22:39 +0500 10.0.31.114 NONE/400 [several lines of binary data omitted]"

SARG: searching for 'x20'

SARG: getword backtrace:

SARG: 1:sarg() [0x4054b7]

SARG: 2:sarg() [0x405ac9]

SARG: 3:sarg() [0x40ab82]

SARG: 4:/lib64/libc.so.6(__libc_start_main+0xfd) [0x7facdd6e4d5d]

SARG: 5:sarg() [0x4029a9]

SARG: Maybe you have a broken amount of data in your /var/log/squid/access.log file


The error indicates that your squid log file contains broken data (junk characters), so the file cannot be processed properly. Either remove the junk data or re-create the log file.
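If you would rather keep the existing log than start a new one, here is a minimal sketch for stripping the damaged lines. It assumes GNU grep, and it assumes valid log lines contain only printable ASCII (squid normally URL-encodes anything else). clean_squid_log is a hypothetical helper name. It writes a filtered copy and leaves the original untouched. Because the junk in the log above spans several physical lines, any fragment that happens to consist only of printable characters would still get through.

```shell
#!/bin/sh
# Sketch: copy a squid access.log, keeping only lines made of printable
# ASCII characters and whitespace. Lines containing binary junk (like the
# one SARG rejected above) are dropped. Assumes GNU grep.
clean_squid_log() {
    in="$1"    # original log, e.g. /var/log/squid/access.log
    out="$2"   # filtered copy to feed to SARG

    # -a: read binary data as text instead of printing "Binary file matches"
    # -v: keep only lines that do NOT contain a non-printable byte
    # LC_ALL=C: classify single bytes, so anything outside ASCII counts as junk
    LC_ALL=C grep -av '[^[:print:][:space:]]' "$in" > "$out"

    # grep exits 1 when no line survives; only 2 (a real error) is a failure
    [ $? -le 1 ]
}
```

You could then run SARG against the copy, for example with sarg -l /var/tmp/access.clean.log (the output path is only an example).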

Thanks for your reply, I got your point. I found that the log files are rotated on a weekly basis, so SARG failed to get all the log data from access.log. Please guide me on how to stop this rotation; I did not find any logfile_rotation directive in squid.conf. As you suggested above, I made a new access.log file, and SARG managed to read the log file but did not write anything. Please guide me with commands if needed.

squidGuard-1.4-9.el6.x86_64

squid-3.1.23-1.el6.x86_64

Result

[root@20proxy@pu bin]# sarg -x -z

SARG: Init

SARG: Loading configuration from /etc/sarg/sarg.conf

SARG: TAG: access_log /var/log/squid/access.log

SARG: TAG: output_dir /var/www/html/squid-reports

SARG: TAG: resolve_ip yes

SARG: TAG: date_format e

SARG: TAG: overwrite_report yes

SARG: TAG: mail_utility mail

SARG: TAG: show_successful_message no

SARG: TAG: external_css_file /var/www/sarg/sarg.css

SARG: Parameters:

SARG: Hostname or IP address (-a) =

SARG: Useragent log (-b) =

SARG: Exclude file (-c) =

SARG: Date from-until (-d) =

SARG: Email address to send reports (-e) =

SARG: Config file (-f) = /etc/sarg/sarg.conf

SARG: Date format (-g) = Europe (dd/mm/yyyy)

SARG: IP report (-i) = No

SARG: Input log (-l) = /var/log/squid/access.log

SARG: Resolve IP Address (-n) = Yes

SARG: Output dir (-o) = /var/www/html/squid-reports/

SARG: Use Ip Address instead of userid (-p) = No

SARG: Accessed site (-s) =

SARG: Time (-t) =

SARG: User (-u) =

SARG: Temporary dir (-w) = /tmp/sarg

SARG: Debug messages (-x) = Yes

SARG: Process messages (-z) = Yes

SARG:

SARG: sarg version: 2.3.1 Sep-18-2010

SARG: Reading access log file: /var/log/squid/access.log

SARG: Records in file: 31515, reading: 100.00%

SARG: Records read: 31536, written: 0, excluded: 87

SARG: Squid log format

SARG: No records found

SARG: End

How do I resolve this, sir? Please help and guide me.

root@debian:~# sarg -x -z

SARG: Init

SARG: Loading configuration from /etc/sarg/sarg.conf

SARG: TAG: access_log /var/log/squid3/access.log

SARG: TAG: title “Squid User Access Reports”

SARG: TAG: font_face Tahoma,Verdana,Arial

SARG: TAG: header_color darkblue

SARG: TAG: header_bgcolor blanchedalmond

SARG: TAG: font_size 9px

SARG: TAG: background_color white

SARG: TAG: text_color #000000

SARG: TAG: text_bgcolor lavender

…..

SARG: sarg version: 2.3.6 Arp-21-2013

SARG: Loading User table: /etc/sarg/usertab

SARG: Reading access log file: /var/log/squid3/access.log

SARG: Records read: 85, written: 85, excluded: 0

SARG: Squid log format

SARG: (info) date=02/11/2016

SARG: (info) period=02 Nov 2016

SARG: Period: 02 Nov 2016

SARG: (info) outdirname=/var/lib/sarg/02Nov2016-02Nov2016

SARG: Sorting log /tmp/sarg/192_168_0_200.user_unsort

SARG: Making file: /tmp/sarg/192_168_0_200

SARG: (info) Dansguardian report not produced because no dansguardian configuration file was provided

SARG: (info) No redirector logs provided to produce that kind of report

SARG: (info) No downloaded files to report

SARG: (info) Authentication failures report not produced because it is empty

SARG: (info) Redirector report not generated because it is empty

SARG: Sorting file: /tmp/sarg/192_168_0_200.utmp

SARG: Making report: 192.168.0.200

SARG: Making index.html

Hello,

Can anyone tell me how to rotate the squid log on a daily basis? Right now it is rotated weekly.

Regards

Santosh
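On RHEL/CentOS the squid package usually leaves rotation to logrotate rather than to squid.conf, so the weekly schedule normally lives in /etc/logrotate.d/squid. (The related squid.conf option is called logfile_rotate, not logfile_rotation.) The sketch below shows a daily policy. It assumes that file, the /var/log/squid path, and logfile_rotate 0 in squid.conf, so that squid -k rotate only reopens the logs while logrotate does the renaming. Compare it with the file your package installed rather than replacing it blindly. The prerotate step is an optional addition, not something the package ships: it runs SARG on the log before it is renamed, so no day is missing from the reports.

```
# /etc/logrotate.d/squid (sketch): rotate daily instead of weekly
/var/log/squid/*.log {
    daily
    rotate 7
    compress
    notifempty
    missingok
    sharedscripts
    prerotate
        # optional: build the SARG report before the log is renamed
        /usr/local/bin/sarg > /dev/null 2>&1
    endscript
    postrotate
        # ask squid to reopen its log files (assumes logfile_rotate 0)
        /usr/sbin/squid -k rotate 2>/dev/null
    endscript
}
```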

Hi Ravi, I have already installed SARG on my cache server and every step completed well, but I cannot access http://mychaceserverip/squid-reports/index.html; I get the error “(111) Connection refused”. Can you tell me why this happens? Thanks in advance.

Is port 80 open in your iptables firewall? Have you tested the link from the local machine using http://localhost/squid-reports/index.html?
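"(111) Connection refused" usually means nothing is accepting connections on port 80, so first check that Apache is running (service httpd status). If the firewall is the problem, the stock iptables service on RHEL/CentOS 6 reads its rules from /etc/sysconfig/iptables. The sketch below assumes that default INPUT chain layout: the line goes above the final REJECT rules, followed by service iptables restart.

```
# /etc/sysconfig/iptables (excerpt): allow HTTP to reach Apache
-A INPUT -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT
```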

File not found /var/log/squid/access.log

Maybe the path to the squid log file is different in your Linux distribution. Please check it and set the correct squid log path in the configuration.
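Here is a minimal sketch of that check. It assumes the two common default locations (/var/log/squid for the squid package, /var/log/squid3 for squid3 as on Debian/Ubuntu) and GNU sed. find_squid_log and set_access_log are hypothetical helper names. Afterwards, run sarg -x and look at the "Loading configuration from" line to confirm which sarg.conf is actually in use.

```shell
#!/bin/sh
# Sketch: find the squid access log and point sarg.conf at it.
# The candidate paths passed in are assumptions (common package defaults).

# Print the first argument that exists as a regular file; return 1 if none do.
find_squid_log() {
    for f in "$@"; do
        if [ -f "$f" ]; then
            printf '%s\n' "$f"
            return 0
        fi
    done
    return 1
}

# Replace an existing, uncommented "access_log ..." line in sarg.conf.
# Uses GNU sed -i; a commented-out "#access_log" line is left alone.
set_access_log() {
    conf="$1"   # path to sarg.conf
    log="$2"    # path to the squid access log
    sed -i "s|^access_log[[:space:]].*|access_log $log|" "$conf"
}

# Example (use the sarg.conf path that "sarg -x" reports):
#   log=$(find_squid_log /var/log/squid/access.log /var/log/squid3/access.log) &&
#       set_access_log /usr/local/etc/sarg.conf "$log"
```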

HI,

I have successfully installed and configured SARG reports. My question is: how can I restrict access to the SARG URL, for example http://10..x.x.x/squid-reports/?

Regards

Faisal Khan

You can use Apache .htaccess to password protect that directory. Please use our search form to find the article on Apache password protection.
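Here is a minimal sketch. It assumes the reports live in /var/www/html/squid-reports and a RHEL/Fedora-style Apache where extra configuration can go in a file under /etc/httpd/conf.d/. Putting a <Directory> block in the server configuration avoids needing AllowOverride for an .htaccess file. The file name, the password file /etc/httpd/.htpasswd and the user name admin are placeholders. Create the password file first with htpasswd -c /etc/httpd/.htpasswd admin, then reload Apache.

```
# e.g. /etc/httpd/conf.d/squid-reports.conf (hypothetical file name)
<Directory "/var/www/html/squid-reports">
    AuthType Basic
    AuthName "SARG reports"
    AuthUserFile /etc/httpd/.htpasswd
    Require valid-user
</Directory>
```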

I did the installation and then ran the reporting. I can log on to the site and see the logs with the dates, but when I click on them I get an error:

The site could be temporarily unavailable or too busy. Try again in a few moments.

If you are unable to load any pages, check your computer’s network connection.

If your computer or network is protected by a firewall or proxy, make sure that Firefox is permitted to access the Web.

The installation went well with no problems, but when I go to the reports I can see them on the web page, but

Actually, the above error was resolved by this:

http://sourceforge.net/p/sarg/discussion/363374/thread/291b358b/

Thanks

Please help me resolve the following error.

I have already installed all the dependencies mentioned above.

Version 2.3.8

gcc -std=gnu99 -c -I. -DBINDIR=\”/usr/local/bin\” -DSYSCONFDIR=\”/usr/local/etc\” -DFONTDIR=\”/usr/local/share/sarg/fonts\” -DIMAGEDIR=\”/usr/local/share/sarg/images\” -DSARGPHPDIR=\”/var/www/html\” -DLOCALEDIR=\”/usr/local/share/locale\” -DPACKAGE_NAME=\”sarg\” -DPACKAGE_TARNAME=\”sarg\” -DPACKAGE_VERSION=\”2.3.8\” -DPACKAGE_STRING=\”sarg\ 2.3.8\” -DPACKAGE_BUGREPORT=\”\” -DPACKAGE_URL=\”\” -DHAVE_DIRENT_H=1 -DSTDC_HEADERS=1 -DHAVE_SYS_TYPES_H=1 -DHAVE_SYS_STAT_H=1 -DHAVE_STDLIB_H=1 -DHAVE_STRING_H=1 -DHAVE_MEMORY_H=1 -DHAVE_STRINGS_H=1 -DHAVE_INTTYPES_H=1 -DHAVE_STDINT_H=1 -DHAVE_UNISTD_H=1 -DHAVE_STDIO_H=1 -DHAVE_STDLIB_H=1 -DHAVE_STRING_H=1 -DHAVE_STRINGS_H=1 -DHAVE_SYS_TIME_H=1 -DHAVE_TIME_H=1 -DHAVE_UNISTD_H=1 -DHAVE_DIRENT_H=1 -DHAVE_SYS_TYPES_H=1 -DHAVE_SYS_SOCKET_H=1 -DHAVE_NETDB_H=1 -DHAVE_ARPA_INET_H=1 -DHAVE_NETINET_IN_H=1 -DHAVE_SYS_STAT_H=1 -DHAVE_CTYPE_H=1 -DHAVE_ERRNO_H=1 -DHAVE_SYS_RESOURCE_H=1 -DHAVE_SYS_WAIT_H=1 -DHAVE_STDARG_H=1 -DHAVE_INTTYPES_H=1 -DHAVE_LIMITS_H=1 -DHAVE_LOCALE_H=1 -DHAVE_EXECINFO_H=1 -DHAVE_MATH_H=1 -DHAVE_LIBINTL_H=1 -DHAVE_LIBGEN_H=1 -DHAVE_STDBOOL_H=1 -DHAVE_GETOPT_H=1 -DHAVE_FCNTL_H=1 -DHAVE_GD_H=1 -DHAVE_GDFONTL_H=1 -DHAVE_GDFONTT_H=1 -DHAVE_GDFONTS_H=1 -DHAVE_GDFONTMB_H=1 -DHAVE_GDFONTG_H=1 -DHAVE_LDAP_H=1 -DHAVE_ICONV=1 -DICONV_CONST= -DHAVE_ICONV_H=1 -DHAVE_PCRE_H=1 -DENABLE_NLS=1 -DHAVE_GETTEXT=1 -DHAVE_DCGETTEXT=1 -DHAVE_FOPEN64=1 -D_LARGEFILE64_SOURCE=1 -DHAVE_BZERO=1 -DHAVE_BACKTRACE=1 -DHAVE_SYMLINK=1 -DHAVE_LSTAT=1 -DHAVE_GETNAMEINFO=1 -DHAVE_GETADDRINFO=1 -DHAVE_MKSTEMP=1 -DSIZEOF_RLIM_T=8 -DRLIM_STRING=\”%lli\” -g -O2 -Wall -Wno-sign-compare -Wextra -Wno-unused-parameter -Werror=implicit-function-declaration -Werror=format log.c

log.c: In function ‘main’:

log.c:1506: error: format ‘%li’ expects type ‘long int’, but argument 7 has type ‘long long int’

log.c:1513: error: format ‘%li’ expects type ‘long int’, but argument 8 has type ‘long long int’

log.c:1564: error: format ‘%li’ expects type ‘long int’, but argument 2 has type ‘long long int’

make: *** [log.o] Error 1

Can I generate a traffic report for a specific IP and a specific period of time, e.g. 1/Apr/2014 to 5/Apr/2015?
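The SARG options listed in the debug output on this page include -d (date from-until) and -a (hostname or IP address), which together should cover this case. Below is a minimal sketch. It assumes the dd/mm/yyyy-dd/mm/yyyy range format that sarg's help text gives for -d. It also assumes the log SARG reads still contains the whole period; older, rotated logs may need to be passed with -l. sarg_report_for_ip is a hypothetical wrapper name.

```shell
#!/bin/sh
# Hypothetical wrapper: report on one client IP for a date range.
# Assumes sarg is on PATH and takes -d as dd/mm/yyyy-dd/mm/yyyy.
sarg_report_for_ip() {
    ip="$1"     # client address, e.g. 10.0.31.114
    from="$2"   # start date, dd/mm/yyyy
    to="$3"     # end date, dd/mm/yyyy
    sarg -a "$ip" -d "$from-$to"
}

# Example: sarg_report_for_ip 10.0.31.114 01/04/2014 05/04/2015
```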

Hi

Everything went well, but I can’t view the reports in my browser. Whenever I type http://locahost/squid-reports or http://ipaddress/squid-reports, the browser simply returns a Not Found error:

The requested URL /squid-reports was not found on this server.

Apache/2.2.17 (Fedora) Server at 192.168.50.4 Port 80

Please help!
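A "Not Found" from Apache usually means the URL does not map to the directory SARG writes into. Below is a minimal sketch for reading output_dir from sarg.conf, so it can be compared with Apache's DocumentRoot (/var/www/html by default on Fedora/RHEL). sarg_conf_value is a hypothetical helper. The sarg.conf path in the example is an assumption; use the one that sarg -x reports.

```shell
#!/bin/sh
# Sketch: print the value of an option from sarg.conf.
# If the option appears more than once, the last occurrence is printed.
sarg_conf_value() {
    key="$1"    # option name, e.g. output_dir
    conf="$2"   # path to sarg.conf
    # Match lines whose first field is exactly the key; commented lines
    # start with "#" and are skipped. Drop the key, trim, remember the value.
    awk -v k="$key" '
        $1 == k { $1 = ""; sub(/^[ \t]+/, ""); v = $0 }
        END { if (v == "") exit 1; print v }
    ' "$conf"
}

# Example: the reports should then be under that directory, e.g.
#   dir=$(sarg_conf_value output_dir /usr/local/etc/sarg.conf) && ls -l "$dir"
```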

Hi Ravi,

I got good results up to the sample output, but I cannot get the access report in the web browser. Please help me.

Hello friend,

Excellent tutorial.

Can you help me with this?

When I run the ./configure command, it gives me the following error:

configure: pcre.h was not found so the regexp won’t be available in the hostalias

And then, when I run make:

# make

gcc -std=gnu99 -c -I. -DBINDIR=\”/usr/local/bin\” -DSYSCONFDIR=\”/usr/local/etc\” -DFONTDIR=\”/usr/local/share/sarg/fonts\” -DIMAGEDIR=\”/usr/local/share/sarg/images\” -DSARGPHPDIR=\”/var/www/html\” -DLOCALEDIR=\”/usr/local/share/locale\” -DPACKAGE_NAME=\”sarg\” -DPACKAGE_TARNAME=\”sarg\” -DPACKAGE_VERSION=\”2.3.8\” -DPACKAGE_STRING=\”sarg\ 2.3.8\” -DPACKAGE_BUGREPORT=\”\” -DPACKAGE_URL=\”\” -DHAVE_DIRENT_H=1 -DSTDC_HEADERS=1 -DHAVE_SYS_TYPES_H=1 -DHAVE_SYS_STAT_H=1 -DHAVE_STDLIB_H=1 -DHAVE_STRING_H=1 -DHAVE_MEMORY_H=1 -DHAVE_STRINGS_H=1 -DHAVE_INTTYPES_H=1 -DHAVE_STDINT_H=1 -DHAVE_UNISTD_H=1 -DHAVE_STDIO_H=1 -DHAVE_STDLIB_H=1 -DHAVE_STRING_H=1 -DHAVE_STRINGS_H=1 -DHAVE_SYS_TIME_H=1 -DHAVE_TIME_H=1 -DHAVE_UNISTD_H=1 -DHAVE_DIRENT_H=1 -DHAVE_SYS_TYPES_H=1 -DHAVE_SYS_SOCKET_H=1 -DHAVE_NETDB_H=1 -DHAVE_ARPA_INET_H=1 -DHAVE_NETINET_IN_H=1 -DHAVE_SYS_STAT_H=1 -DHAVE_CTYPE_H=1 -DHAVE_ERRNO_H=1 -DHAVE_SYS_RESOURCE_H=1 -DHAVE_SYS_WAIT_H=1 -DHAVE_STDARG_H=1 -DHAVE_INTTYPES_H=1 -DHAVE_LIMITS_H=1 -DHAVE_LOCALE_H=1 -DHAVE_EXECINFO_H=1 -DHAVE_MATH_H=1 -DHAVE_LIBINTL_H=1 -DHAVE_LIBGEN_H=1 -DHAVE_STDBOOL_H=1 -DHAVE_GETOPT_H=1 -DHAVE_FCNTL_H=1 -DHAVE_GD_H=1 -DHAVE_GDFONTL_H=1 -DHAVE_GDFONTT_H=1 -DHAVE_GDFONTS_H=1 -DHAVE_GDFONTMB_H=1 -DHAVE_GDFONTG_H=1 -DHAVE_ICONV=1 -DICONV_CONST= -DHAVE_ICONV_H=1 -DENABLE_NLS=1 -DHAVE_GETTEXT=1 -DHAVE_DCGETTEXT=1 -DHAVE_FOPEN64=1 -D_LARGEFILE64_SOURCE=1 -DHAVE_BZERO=1 -DHAVE_BACKTRACE=1 -DHAVE_SYMLINK=1 -DHAVE_LSTAT=1 -DHAVE_GETNAMEINFO=1 -DHAVE_GETADDRINFO=1 -DHAVE_MKSTEMP=1 -DSIZEOF_RLIM_T=8 -DRLIM_STRING=\”%lli\” -g -O2 -Wall -Wno-sign-compare -Wextra -Wno-unused-parameter -Werror=implicit-function-declaration -Werror=format util.c

gcc -std=gnu99 -c -I. -DBINDIR=\”/usr/local/bin\” -DSYSCONFDIR=\”/usr/local/etc\” -DFONTDIR=\”/usr/local/share/sarg/fonts\” -DIMAGEDIR=\”/usr/local/share/sarg/images\” -DSARGPHPDIR=\”/var/www/html\” -DLOCALEDIR=\”/usr/local/share/locale\” -DPACKAGE_NAME=\”sarg\” -DPACKAGE_TARNAME=\”sarg\” -DPACKAGE_VERSION=\”2.3.8\” -DPACKAGE_STRING=\”sarg\ 2.3.8\” -DPACKAGE_BUGREPORT=\”\” -DPACKAGE_URL=\”\” -DHAVE_DIRENT_H=1 -DSTDC_HEADERS=1 -DHAVE_SYS_TYPES_H=1 -DHAVE_SYS_STAT_H=1 -DHAVE_STDLIB_H=1 -DHAVE_STRING_H=1 -DHAVE_MEMORY_H=1 -DHAVE_STRINGS_H=1 -DHAVE_INTTYPES_H=1 -DHAVE_STDINT_H=1 -DHAVE_UNISTD_H=1 -DHAVE_STDIO_H=1 -DHAVE_STDLIB_H=1 -DHAVE_STRING_H=1 -DHAVE_STRINGS_H=1 -DHAVE_SYS_TIME_H=1 -DHAVE_TIME_H=1 -DHAVE_UNISTD_H=1 -DHAVE_DIRENT_H=1 -DHAVE_SYS_TYPES_H=1 -DHAVE_SYS_SOCKET_H=1 -DHAVE_NETDB_H=1 -DHAVE_ARPA_INET_H=1 -DHAVE_NETINET_IN_H=1 -DHAVE_SYS_STAT_H=1 -DHAVE_CTYPE_H=1 -DHAVE_ERRNO_H=1 -DHAVE_SYS_RESOURCE_H=1 -DHAVE_SYS_WAIT_H=1 -DHAVE_STDARG_H=1 -DHAVE_INTTYPES_H=1 -DHAVE_LIMITS_H=1 -DHAVE_LOCALE_H=1 -DHAVE_EXECINFO_H=1 -DHAVE_MATH_H=1 -DHAVE_LIBINTL_H=1 -DHAVE_LIBGEN_H=1 -DHAVE_STDBOOL_H=1 -DHAVE_GETOPT_H=1 -DHAVE_FCNTL_H=1 -DHAVE_GD_H=1 -DHAVE_GDFONTL_H=1 -DHAVE_GDFONTT_H=1 -DHAVE_GDFONTS_H=1 -DHAVE_GDFONTMB_H=1 -DHAVE_GDFONTG_H=1 -DHAVE_ICONV=1 -DICONV_CONST= -DHAVE_ICONV_H=1 -DENABLE_NLS=1 -DHAVE_GETTEXT=1 -DHAVE_DCGETTEXT=1 -DHAVE_FOPEN64=1 -D_LARGEFILE64_SOURCE=1 -DHAVE_BZERO=1 -DHAVE_BACKTRACE=1 -DHAVE_SYMLINK=1 -DHAVE_LSTAT=1 -DHAVE_GETNAMEINFO=1 -DHAVE_GETADDRINFO=1 -DHAVE_MKSTEMP=1 -DSIZEOF_RLIM_T=8 -DRLIM_STRING=\”%lli\” -g -O2 -Wall -Wno-sign-compare -Wextra -Wno-unused-parameter -Werror=implicit-function-declaration -Werror=format log.c

log.c: In function ‘main’:

log.c:1506: error: format ‘%li’ expects type ‘long int’, but argument 7 has type ‘long long int’

log.c:1513: error: format ‘%li’ expects type ‘long int’, but argument 8 has type ‘long long int’

log.c:1564: error: format ‘%li’ expects type ‘long int’, but argument 2 has type ‘long long int’

make: *** [log.o] Error 1

Install the pcre-devel package, which provides pcre.h, using yum (yum install pcre-devel), then run ./configure again.

Hi Ravi,

Everything went well, but I can’t view the reports in my browser. Whenever I type http://locahost/squid-reports or http://ipaddress/squid-reports, the browser simply returns the error message “THE REQUESTED URL COULD NOT BE RETRIEVED”. Please help!

Flush your iptables rules.

Thank you for this tutorial. This is a great tool for IT professionals. However, after entering the “sarg -x” command I get this error:

SARG: sarg version: 2.3.8 Feb-07-2014

SARG: Reading access log file: /var/log/squid/access.log

SARG: getword loop detected after 256 bytes.0%

SARG: Line=”04/Mar/2014:11:25:53 +0800 140157 172.16.0.176 TCP_MISS/302 438 GET”

SARG: Record=”http://ads.cnn.com/event.ng/Type=adxmiss&ClientType=2&ASeg=&AMod=&AOpt=0&AdID=797605&FlightID=560907&TargetID=183732&SiteID=1590&EntityDefResetFlag=0&Segments=DECBHHicHY3JEQBBCAIT4uGJmn9iy-ynq6VUeIZwLiJKyDB4uUktggjmHeLGGunxs7vEsUSytlDazMd1aLZA196guXIy3cXSH7LtOb3BnVRy3g59Z2O8JzFRMz_3cZXHtfKc859yxks239WeetdOjZtD-wAnOCnz&Targets=DECBHHicLZDJFQAhCEMb8sAe6L-xYZnTf0oSogwh18dkovU4A4oGyPJxiUctQIMQOcQAZgdf4C6xPiQGJeNTmuhSZj_EwnSBH3Y4yYap1PqU8nAnpgMPzKaSuq4EuWGo3g42a0kQqDOzPNdA-6I27D7PH3HoWdT0RNUs18D0y3SPV5FTT4jnPSAaMzLnV8JDWhip2U2SKWYmxnpoCdx9ZgqVDxQBSwQ.&Values=DECBHHicJZDJAQAhCAMb4kGQI_Tf2Eb3wQBy224zRhjcDeXWPJKStEShDkPIQE0Je9OWF2toVcXUCJOWWbCclJ8MHKtYJVR7UeSE1bjXY4tckfLEcUVJ-CNMk3rEvJm72kDDKkSQYiracK6IZ6P1juhzyRTVQsvHztG-Z_NewfOOIe_-xUW-q9RZqvK8o44-pFWSv6oP70M6xw..&RawValues=NGUSERID%252C5313de170652160a3c8ef72b2401f964%252CKXID%252Cnqjy664yj%252CTID%252C13939029942996432425196198%252CTIL%252C8363992093935&random=dgAhwyA,bjrkrnNwpWAd&Params.tag.transactionid=13939029942996432425196198&Params.User.UserID=5313de170652160a3c8ef72b2401f964 danilo DIRECT/157.166.224.71 text/html”

SARG: searching for ‘x20’

SARG: getword backtrace:

SARG: 1:sarg() [0x804e57c]

SARG: 2:sarg() [0x804effe]

SARG: 3:sarg() [0x80548a3]

SARG: 4:/lib/libc.so.6(__libc_start_main+0xe6) [0x3fad26]

SARG: 5:sarg() [0x80499c1]

SARG: Maybe you have a broken user ID in your /var/log/squid/access.log file

Can you tell me where I went wrong? I followed each and every step in the tutorial and everything installed successfully, until this error came up.

It seems to be a bug in sarg: it failed to read the log file because of a log entry it cannot parse. Which sarg version are you using?

Hi,

I have installed and configured SARG. When I try to access it, I get the following error message:

While trying to process the request:

GET /squid-reports/ HTTP/1.0

Host: 192.168.30.6

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8

User-Agent: Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.107 Safari/537.36

Accept-Encoding: gzip,deflate,sdch

Accept-Language: en-US,en;q=0.8

Via: 1.1 localhost.localdomain:80 (squid/2.6.STABLE21)

X-Forwarded-For: 192.168.30.15

Cache-Control: max-age=259200

Connection: keep-alive

Hi!

After I enter “#sudo sarg -x” it generates the report, but the last line shows:

“SARG: Maybe you have a broken time in your /var/www/sarg/ONE-SHOT/sarg-date file”

Is there any problem with my access.log?

Also, I can’t find any index.html report inside the ONE-SHOT folder, just IP address folders. Have I set a SARG option the wrong way? Please help me, thanks!

Regards,

Raymond Chong

I also use “Lightsquid”, a very easy and simple tool for squid log analysis.

Yeah, thanks. I had never heard of ‘Lightsquid’; I will surely try it and provide a detailed article soon.

Hi Ravi

Thanks for your reply. I searched the entire system for the path where the log files for SARG are created, but I could not find it.

I have even re-compiled SARG from source and installed it again. It still shows the same error: “SARG: File not found: /var/log/squid/access.log”.

I am installing this service as root. I am not sure where I am going wrong. Could you please shed some light on this issue and help me fix it?

Looking forward to hearing from you.

Jos

Is squid installed on the system, and are logs being written to /var/log/squid/access.log? Please check this first; the log file must exist for SARG to generate reports.

Hi!

Check whether it is in “/var/log/squid3/access.log” instead; this depends on the squid package installed on your system.

You can run

# find /var/log -name access.log

This command searches /var/log for any file named access.log, so you can see where your squid log actually is.

Test and tell us how it goes!

I hope I have helped

regards

It really helps us. Thank you very much!

I would like a module for authenticating squid proxy users through a web login form.

When a user tries to access the internet, they should first be redirected to a web login form, and only after authenticating should they get internet access.

Can anyone suggest how to do this?

Great, thanks for sharing! But could you please write a guide on how to set up Squid and DNS before setting up SARG? I don't see those guides on TecMint!

Yes! The articles are in progress and will appear online next week.

Hi Ravi

The Sarg installation went well on RHEL6.5. I tried to modify the sarg.conf file as per your instruction. However, when I tried to generate the report by running sarg -x it gives me the error saying “SARG: File not found: /var/log/squid/access.log”.

I also noticed that the output path you mentioned does not exist either.

Are these folders created automatically by the SARG installation, or do we need to create them manually?

Looking forward to your response.

Regards

Jos

Please set the correct location of your squid access log file in the sarg.conf file.